Category Archives: Uncategorized

Zoho Writer: An excellent online alternative

I recently gave my opinions on OpenOffice.org’s word processing software Writer. My conclusion was that while it has certainly improved over the last twenty years, it still doesn’t measure up to Microsoft’s behemoth package, online or off. However, that doesn’t mean there isn’t an alternative for those who seek one: I also mentioned in that post that I would be reviewing Zoho Writer, the online word processor from (obviously) Zoho, which turns out to be a very competent competitor to Word. It’s not the perfect replacement, but it comes pretty close for everyday use, and then some.

Zoho offers many applications and services, all of which are relatively competent. And I’m not talking just basic office suite stuff either; they have everything from CRM platforms to retail inventory management. For an online service, they have a surprising breadth of applications on offer.

As you can see, there is a lot there. For this review I’ll focus on their word processor, Writer, which I feel is a highlight of their office productivity suite, but it’s important to note the range of capabilities they have, especially compared to other online options. I also want to mention that their PowerPoint equivalent, Show, is absolutely fantastic, and their version of Excel, Sheet, is also very good. It doesn’t quite live up to the rest of the suite in terms of functionality or design, but that’s not to say it’s not good – it is. It does have significant room for improvement, though: its interface is less involved and less usable, with sparsely populated toolbars, buried commands, and no sidebar, which is a real drawback for a program as powerful as a spreadsheet. Indeed, it’s the one instance in which I can say OpenOffice’s alternative, Calc, is the better option, with more features, a more familiar interface, and better design all around.

That being said, Zoho is the superior option for everything else, and OpenOffice doesn’t provide any enterprise functionality or features beyond the basic office suite anyway. Here we’ll be talking about Writer, a name shared with the OpenOffice equivalent (so don’t mix them up!), and hopefully this will give a good impression of how it all works.

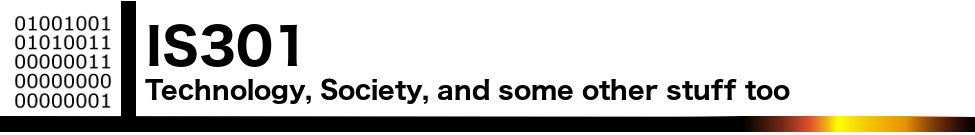

I threw together a nonsense document to mouse around with, and below you can see the spell check, which gives an initial suggestion as well as alternate suggestions. That’s nice, although it doesn’t offer meanings / definitions as Word does. You can also see the Format menu in the sidebar to the left with all the functionality you would expect, including Cut / Paste / Format Painter / etc., as well as standard font and paragraph formatting options. Two additional features strike me as very useful. The first is the Quick Text option, which allows you to specify a particular piece of text that can be inserted with the click of a button or a shortcut key; very good if you frequently use the same phrase or sentence or whatnot in a document. The other is evidenced by the two very faint boxes you can see in the left margin: the plus and text boxes. See those? You might have to look closely. The box with the plus is an insert menu, and the text box opens a formatting menu. They follow your insertion point automatically, and they turned out to be quite a convenience.

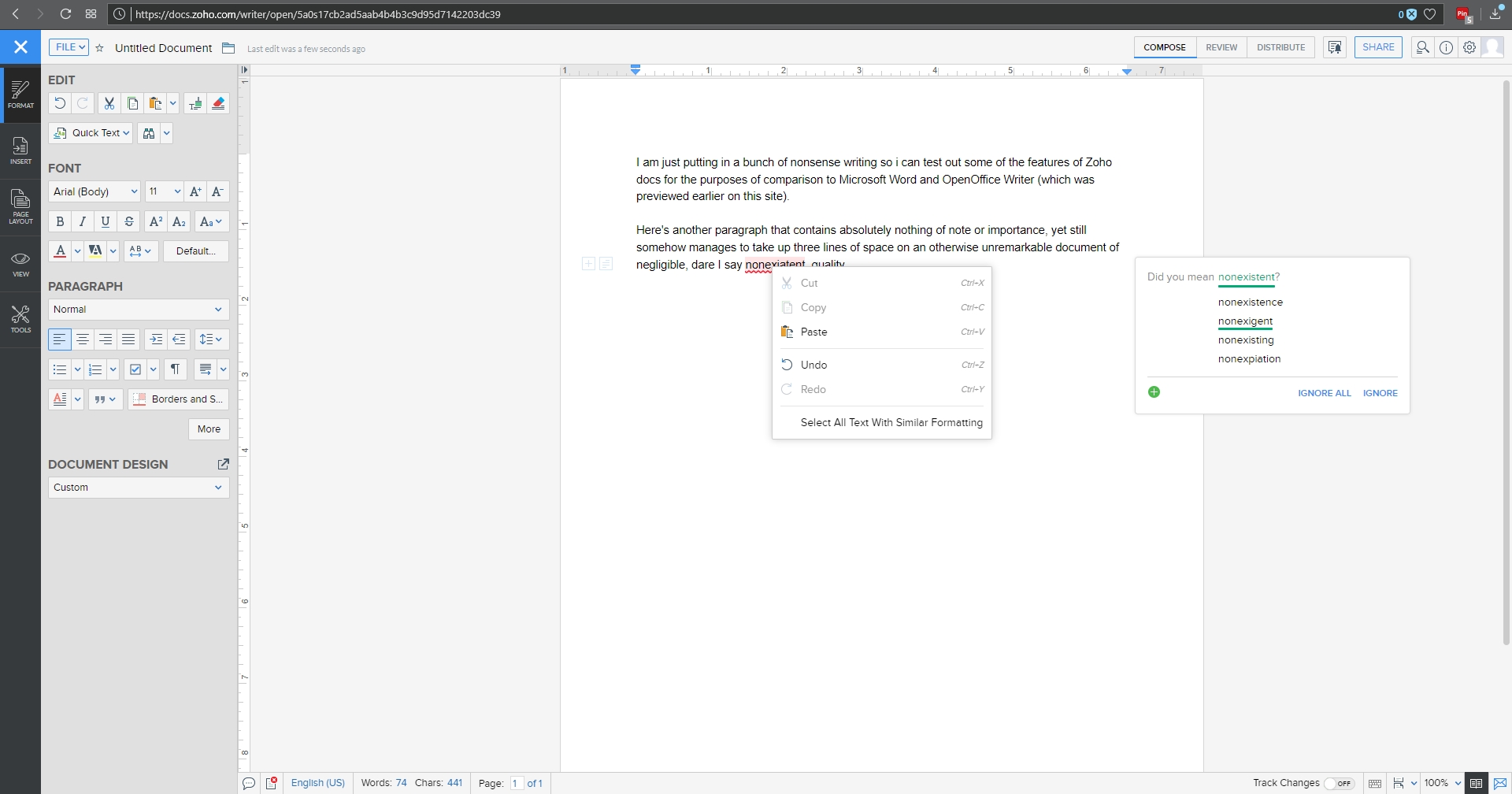

A variety of page layout templates are also readily available, with more available for download, and they are very easy to apply.

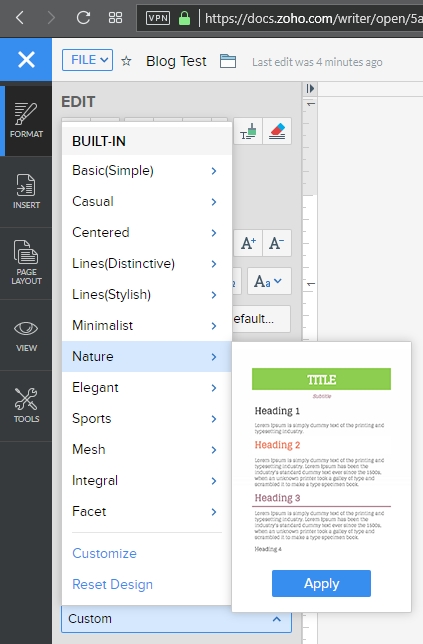

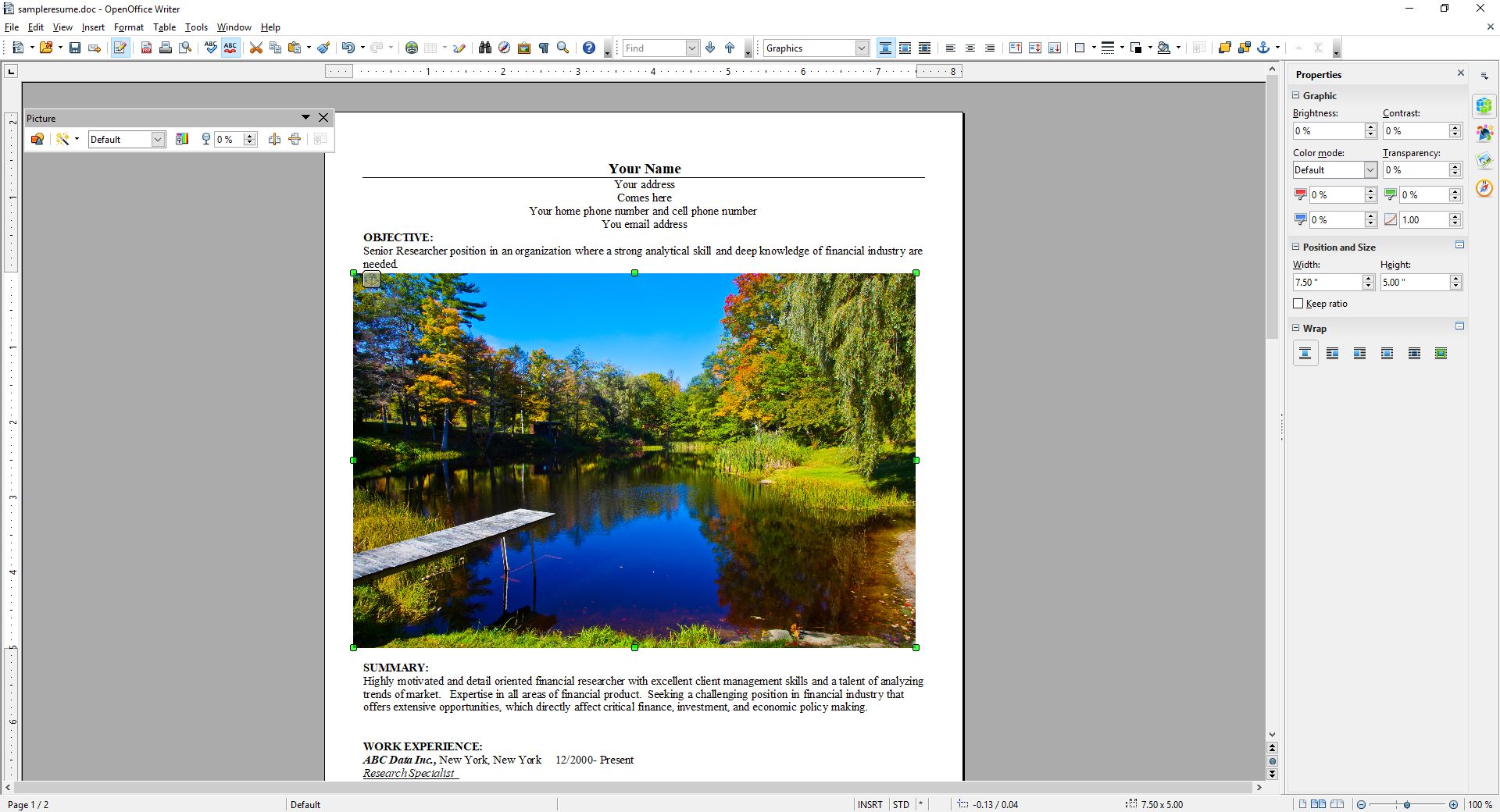

Although this document is hardly complex, inserting an image and a table is something that can trip up even stalwart word processors, and that was true here as well. Inserting the image was easy and worked fine; arranging it for word wrap, however, was more problematic. Smaller images were much easier to lay out.

Inserting a table was also quite easy, and I especially like the live preview it provided as I hovered the mouse over the design options, all of which were neatly contained in the Design tab of the table options window. This is very similar to how Word will open custom contextual tabs that provide additional functionality depending on the specific element you’re working with.

Manipulating the table was much easier than manipulating the picture, which wanted to jump around the page, and even manipulating individual cells was very smooth; everything from changing their dimensions to their color to their alignment and everything in between worked perfectly the first time.

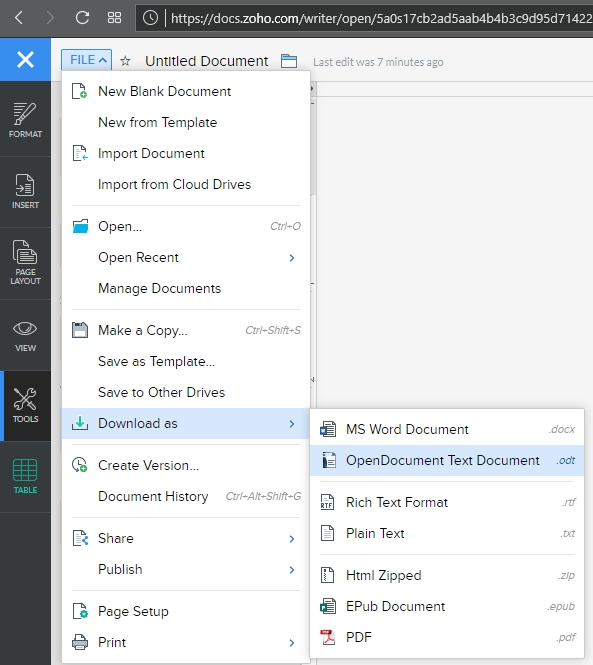

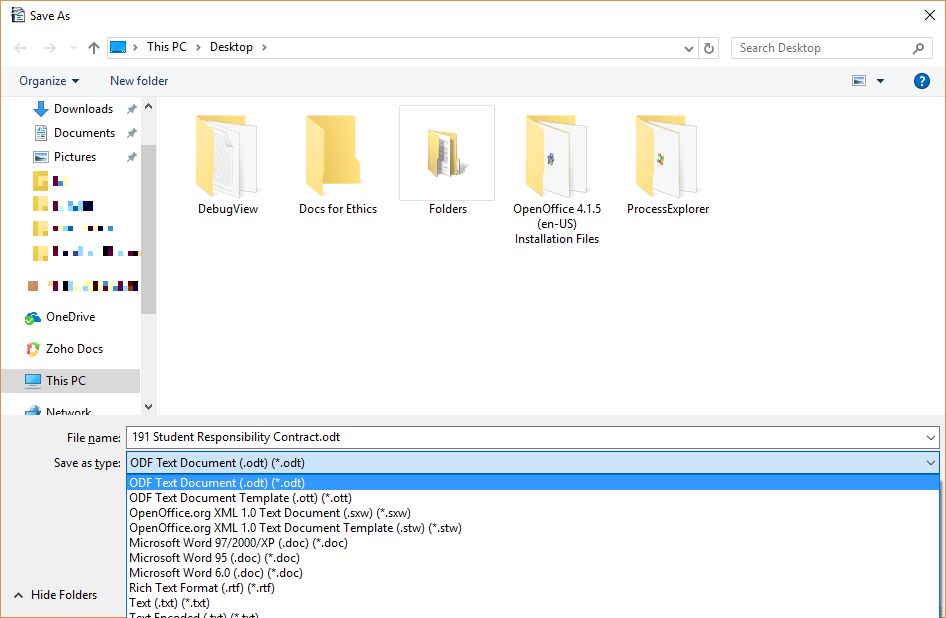

Now is when I need to mention the biggest advantage of (Zoho) Writer over (OpenOffice) Writer: .docx compatibility. OO Writer doesn’t have it, and that’s a huge knock, a terminal knock, actually, against it. With Zoho Writer, when saving a document, you have multiple options: you can save to a cloud service (Zoho has partnered with many, as you can see, although I couldn’t help but notice the OneDrive logo is oddly blurry while the others are very clear), or you can download the file as a Microsoft Word .docx, or even as an .odt file, the open standard used by OpenOffice itself.

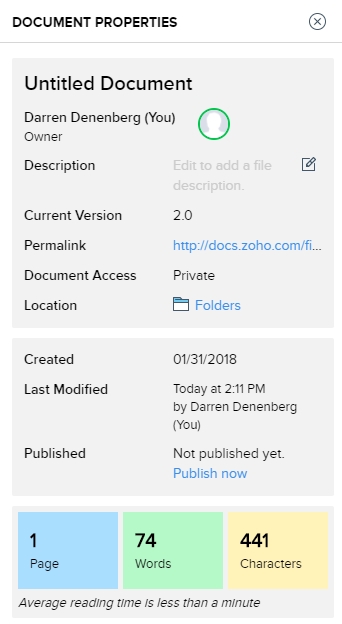

As one would hope, there are also multiple ways to get statistics about your document. They run along the bottom as one would expect, but I am also a big fan of the Document Properties pane, accessed by clicking the ‘i’ with a circle around it at the upper right of the window. Not only does this provide a wealth of information about the document itself, but, coming from an HCI perspective, having the page count, word count and character count in individually shaded boxes, easy to see and immediately locate, is genius. In terms of information presentation and availability, it puts those interrelated metrics right next to each other yet separates them visually, and thereby forces them into a cognitive whole.

Not to get too far off topic, but the reason I’m so enamored with this design choice is that humans, in order to make sense of (and order out of) the information and stimuli presented to them, engage in several practices that help them organize what they see. One is known as Proximity, in which objects that are spatially close together are perceived as part of a larger group, even if they’re not related; so when they are related, as these three metrics are, our ability to process them, their meaning, and their relation to each other increases even more. Another is Similarity, in which objects in the visual field that are similar in some dimension, whether shape, color, orientation, or anything else, are perceived as part of the same group, again even if they aren’t. Those are two reasons presenting that document information this way is so brilliant – it addresses the way we as humans process information.

Consider the Windows logo below. This is an example of both proximity and similarity. We consider the logo as a single shape because the four smaller squares that comprise it are both similar and proximal.

This is the Writer document properties pane, which uses these concepts to such great effect.

It even estimates the average reading time. There is nothing I don’t like about this information window; it’s one of the best interface designs I’ve ever seen, and I’ve seen a lot.
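Out of curiosity, here is a rough sketch of how figures like these can be computed. It is only an illustration in Python, not Zoho’s actual method: the 200 words-per-minute reading speed is my assumption (Zoho doesn’t say what rate it uses), a “word” is simply any run of non-whitespace characters, and page count is left out because it depends on the page layout.

```python
import math
import re

# Assumption: an average reading speed of roughly 200 words per minute.
# Zoho does not say what rate it uses, so treat this constant as a placeholder.
WORDS_PER_MINUTE = 200


def document_stats(text: str, words_per_minute: int = WORDS_PER_MINUTE) -> dict:
    """Compute the kind of metrics shown in a document properties pane."""
    words = re.findall(r"\S+", text)  # any run of non-whitespace counts as a word
    word_count = len(words)
    return {
        "words": word_count,
        "characters": len(text),
        "characters_no_spaces": len(re.sub(r"\s", "", text)),
        # Round up so any non-empty document reports at least one minute.
        "reading_minutes": math.ceil(word_count / words_per_minute) if word_count else 0,
    }


sample = "The quick brown fox jumps over the lazy dog. " * 50
print(document_stats(sample))
# → {'words': 450, 'characters': 2250, 'characters_no_spaces': 1800, 'reading_minutes': 3}
```

Rounding up is a design choice: a 30-word note still shows “1 minute” rather than zero.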

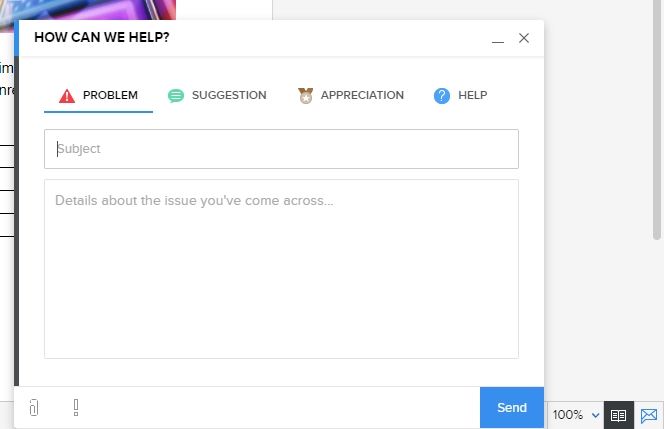

Before I put up the rest of the menu dialogs for your perusal to give you an idea of how they’re implemented, I also want to address a nice usability touch they have incorporated in the form of a support icon. See that little blue envelope way over on the right-hand side?

That’s the Writer support icon, and it opens up a window that allows you to offer feedback or ask questions directly. For a free service, that’s pretty remarkable. It really gives the idea – true or not – that they are at least receptive to feedback or requests for help. I was very surprised by that, and pleasantly so.

As you can probably tell, I think Writer is a great program. It’s not often I get to gush over software, but this company is comparatively unknown, yet it offers such a well-designed, functional, free service that I’m surprised we don’t hear about it much more often. According to their webpage, they are a private company, but one with five thousand employees! They hold user conferences and expos – that is not small time, yet their name recognition, even with millions of users, is small. If I may be so bold, even sacrilegious to some, I’ll go so far as to say it destroys the offerings from Google. The only service that can compete is Microsoft’s Office 365, and even then it’s only a little better; plus, Zoho integrates with all of them anyhow. Zoho has really created something great, and I encourage anyone interested in alternatives to standard office suites to give it a try.

Here are some functional dialogs if you’re interested in seeing all the options available before taking the plunge.


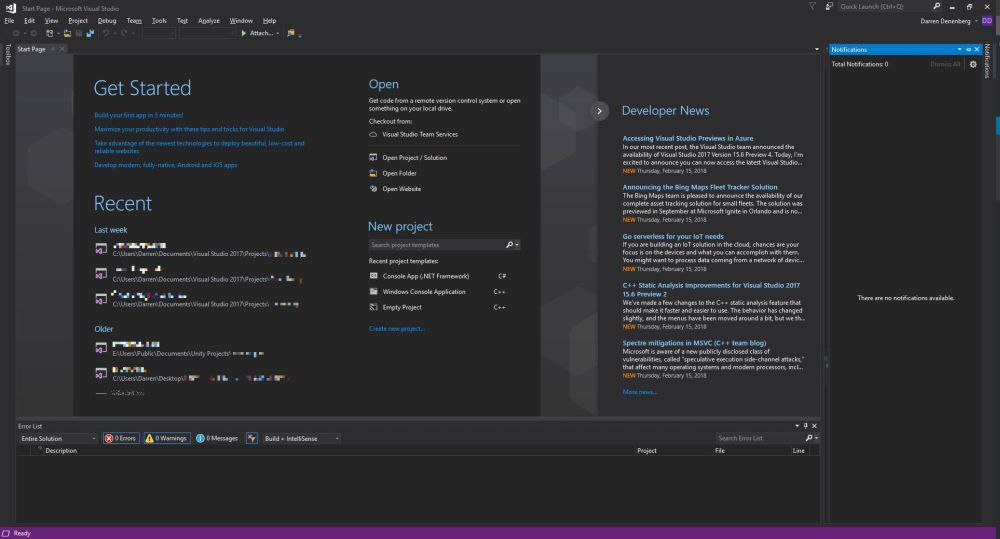

Fixing a component install error in Visual Studio Community 2017 (hint: It’s really easy)

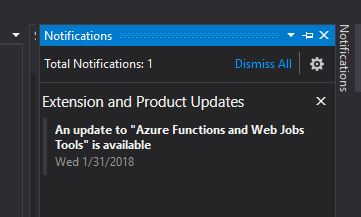

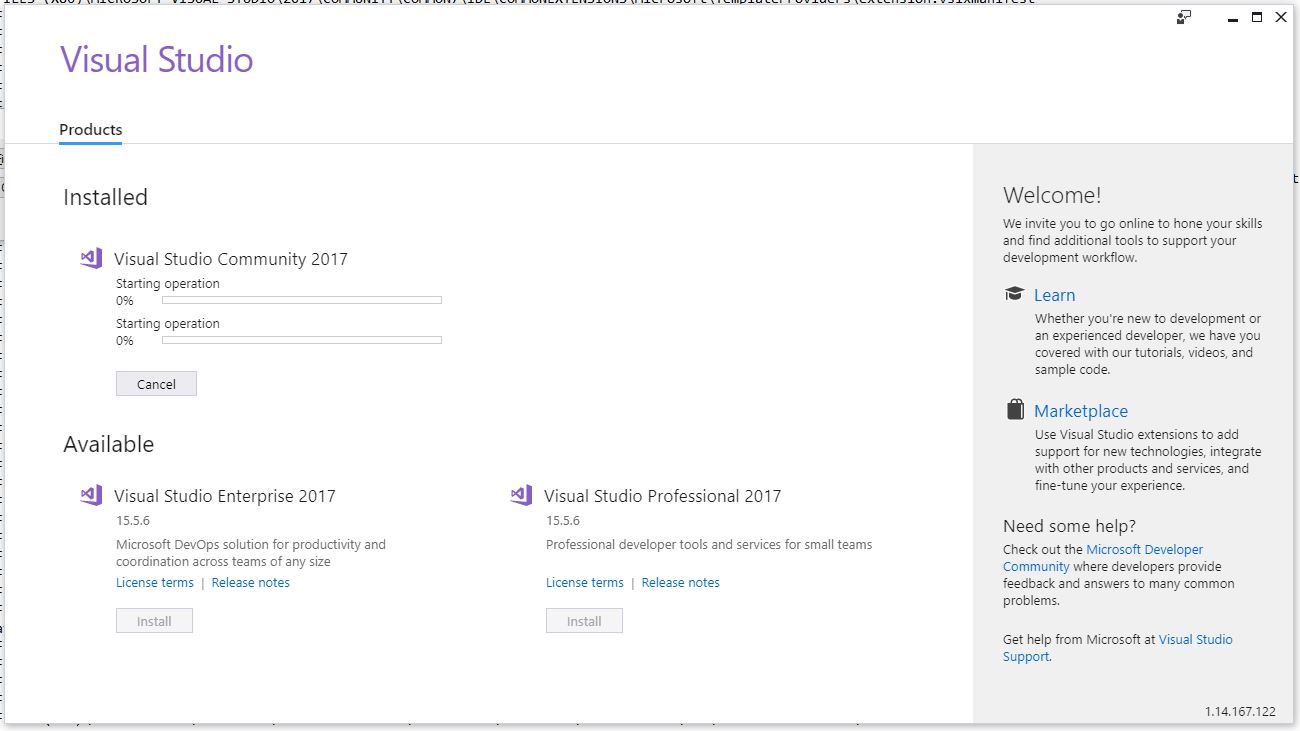

I recently noticed in the notification window of Visual Studio Community ’17 that there were some Azure-related updates available. Normally these updates lead to an uneventful experience in which I approve the updates, VS takes a loooong time to download and install said updates, and then everything moves forward as planned. This time, however, was slightly different, so I thought I’d bring you all along on my journey of discovery and illustrate why Visual Studio’s attempt to help me figure out what went wrong was so unhelpful. Even so, it’s an easy fix that I’m guessing many of you could figure out anyway. Indeed, my approach takes the slightly longer way round.

The story begins with the update notifications. This is a very common thing to happen with this particular Integrated Development Environment (IDE); now that I think about it, I can’t recall a time there weren’t updates to be had. Anyway, as I mentioned, they’re normally uneventful, and these two were for components I don’t use too terribly much: Azure Data Lake and Stream Analytics Tools, and Azure Functions and Web Jobs Tools. The former help with streaming, gathering, and analyzing the massive amounts of data gathered from IoT connected devices, an area in which I have significant interest, so these tools will be useful for me soon (I hope). Azure Functions and Web Jobs Tools are of less interest to me, but I can’t have an incomplete update hanging in the air above me. So, without thinking too much about it, I clicked the notification, clicked [Update], and went about my business.

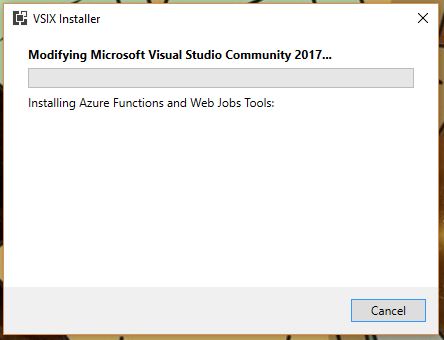

As expected, the process began without much of a hitch.

I should mention that when it comes to Visual Studio, you don’t ‘update’ or ‘patch,’ you instead ‘Modify.’ It’s all just semantics. Anyway, although things had gone well up to this point, that was about to change.

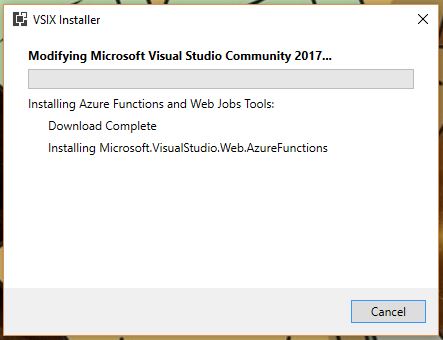

So the Data Lake and Stream Analytics tools installed fine, but the Functions and Web Jobs tools were another story. I don’t even need those, and I probably should have let it go, but I just couldn’t. That red X would have haunted my dreams.

The obvious next step was to check the install log, as the installer so helpfully suggested. Speaking of the installer, if you’re interested: VSIX is a package format for deploying extensions and updates to Visual Studio, and it puts them in the right place with the right components and so on. It’s a compressed file; you can even rename a VSIX file to .zip and extract it. Just wanted to get that out of the way.
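Since a VSIX is just a ZIP archive, you don’t strictly need to rename it at all. As a minimal sketch in Python, the standard zipfile module can list or extract one directly; the function names here are my own, and the file name in the usage note is a placeholder.

```python
from __future__ import annotations

import zipfile
from pathlib import Path


def list_vsix_contents(path: str) -> list[str]:
    """List the files inside a VSIX package.

    zipfile looks at the file's contents, not its extension, so no renaming is needed.
    """
    if not zipfile.is_zipfile(path):
        raise ValueError(f"{path} does not look like a ZIP-based VSIX package")
    with zipfile.ZipFile(path) as archive:
        return archive.namelist()


def extract_vsix(path: str, destination: str) -> Path:
    """Extract every file in the VSIX into `destination` and return that folder."""
    target = Path(destination)
    target.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(path) as archive:
        archive.extractall(target)
    return target
```

For example, list_vsix_contents("SomeExtension.vsix") (a hypothetical file) would typically show entries such as extension.vsixmanifest, the package manifest, and [Content_Types].xml.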

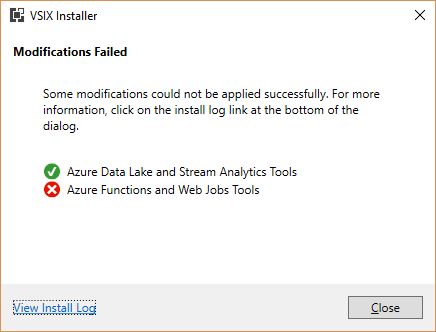

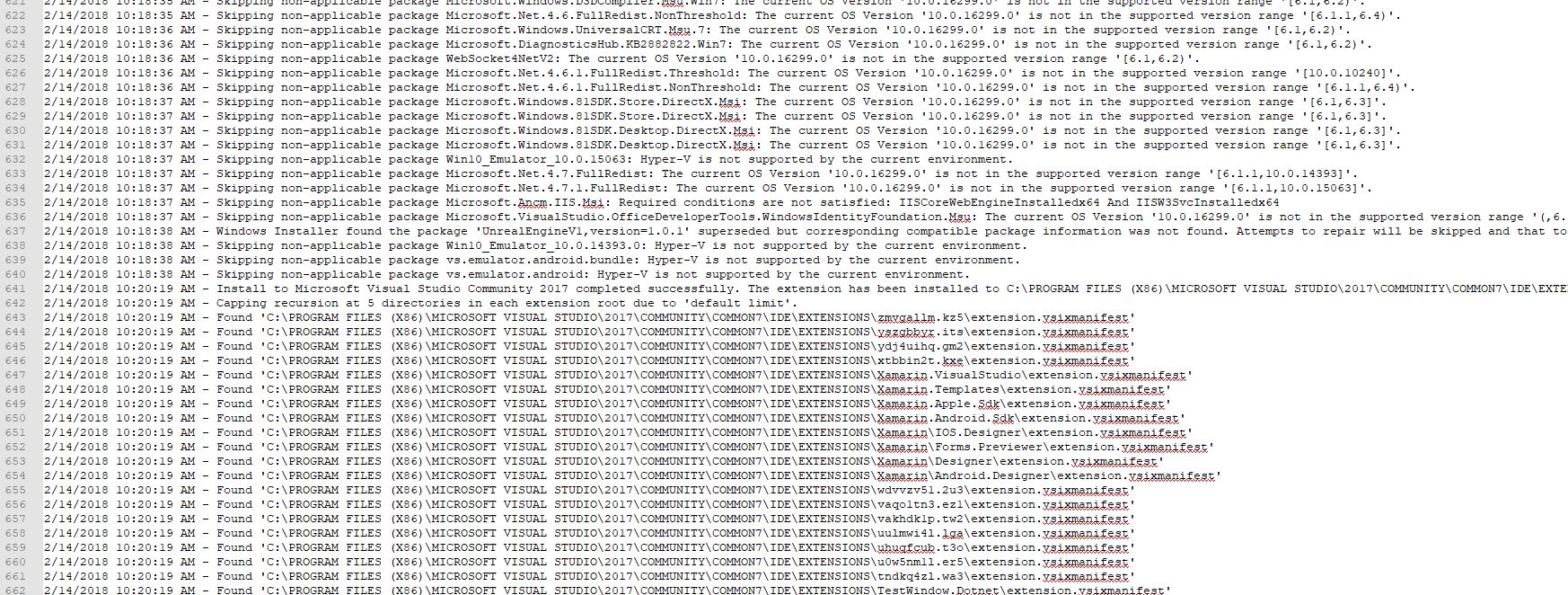

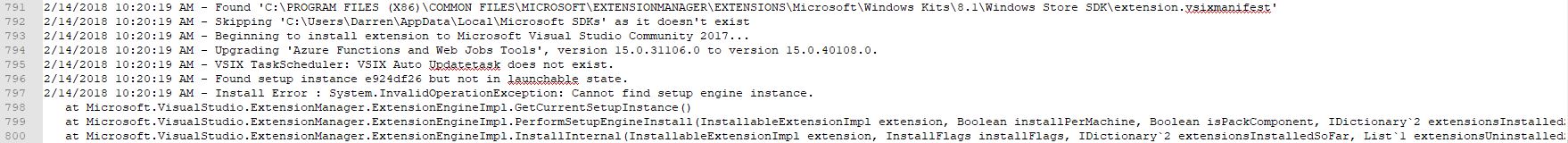

I clicked ‘View Install Log,’ and the result was a breezy 865-line log, mainly, but not entirely, made up of stuff that looked like this:

This log punches you in the gut twice, because A) you have to find the part that identifies the problem in those 800+ lines all on your own, and B) the part that identified this particular problem was at the very end. Thankfully, Ctrl-F helped out significantly here.
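If Ctrl-F isn’t enough, a few lines of Python can do the digging for you. This is a hedged sketch: the keywords in FAILURE_PATTERN are my own guesses, not a documented format for the Visual Studio installer log, so adjust them to whatever your log actually contains.

```python
from __future__ import annotations

import re

# Hypothetical keywords: guesses, not a documented installer log format.
FAILURE_PATTERN = re.compile(r"\b(errors?|fail(?:ed|ure)?|exception)\b", re.IGNORECASE)


def find_failures(log_text: str, context: int = 1) -> list[tuple[int, str]]:
    """Return (line number, text) for each matching line plus `context` lines around it."""
    lines = log_text.splitlines()
    keep: set[int] = set()
    for index, line in enumerate(lines):
        if FAILURE_PATTERN.search(line):
            start = max(0, index - context)
            end = min(len(lines), index + context + 1)
            keep.update(range(start, end))
    # Ascending order, so the latest failure (often the real cause) comes last.
    return [(number + 1, lines[number]) for number in sorted(keep)]
```

Since the culprit here sat at the very end, slicing the result, for example find_failures(text)[-10:], shows the last few hits first thing.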

Luckily, I knew, or at least suspected, that from here it would be easy. That the update task didn’t exist and the setup instance was not in a launchable state indicated to me that there was a disagreement between the installer and Visual Studio, and not that some fatal, unrecoverable error had happened. Fortunately, my suspicions turned out to be correct and it was really easy to fix. In fact, I could have just done it from within Visual Studio, but this is more illustrative. At least I think it is. Maybe it’s not.

I didn’t even need to go full Control Panel on it. I simply went into the Apps section of Settings, scrolled down to Visual Studio, and selected ‘Modify.’

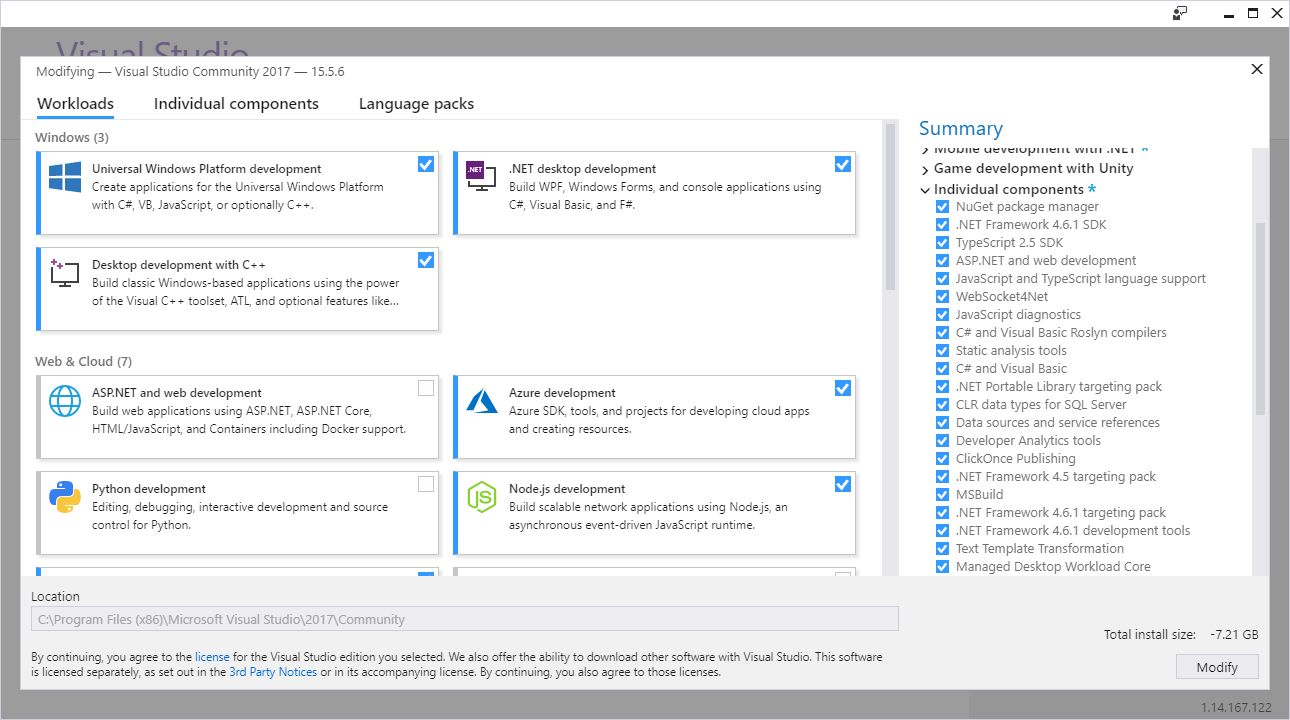

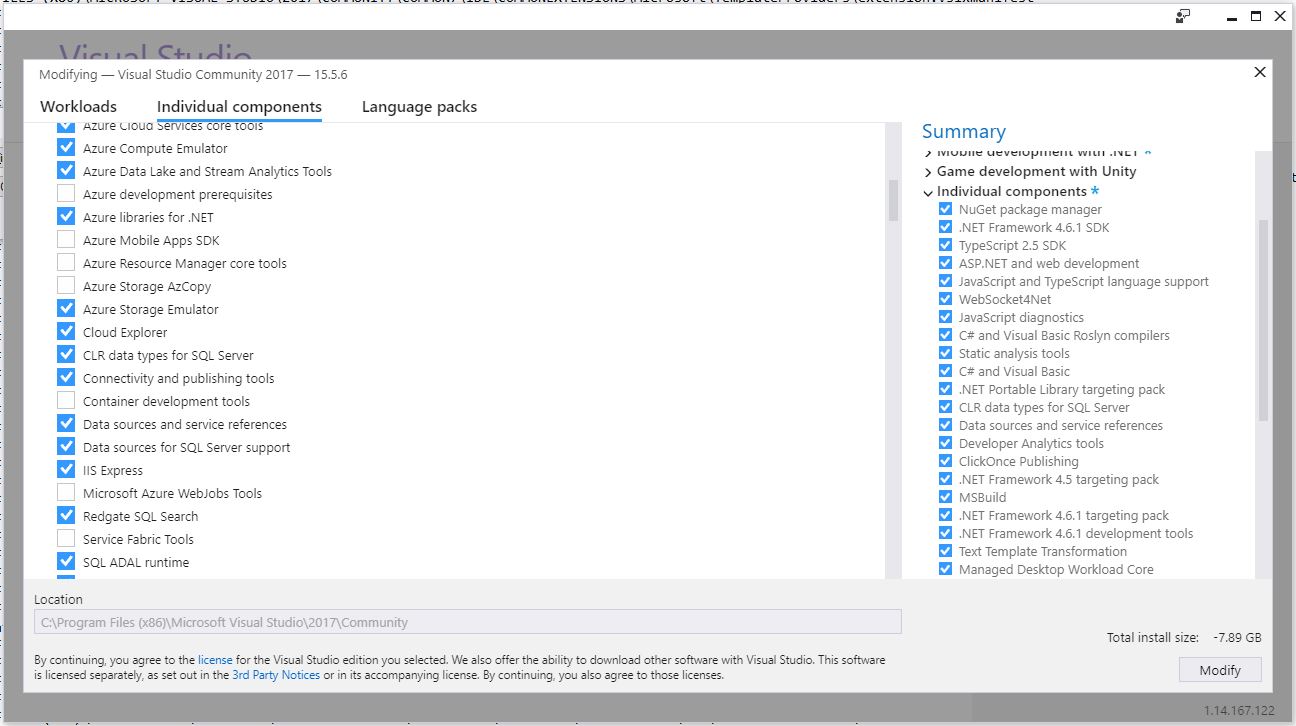

That brought up the Modification window with the ‘Workloads’ tab highlighted, but we want ‘Individual Components.’

You’ll notice that ‘Microsoft Azure WebJobs Tools’ isn’t checked, so that’s an easy fix. That’s also why I mentioned earlier that it could easily be repaired from within VS itself. Clicking that checkbox caused almost all the other checkboxes to become selected, and after I clicked [Modify] in the lower right-hand corner, the long, slow update process began anew!

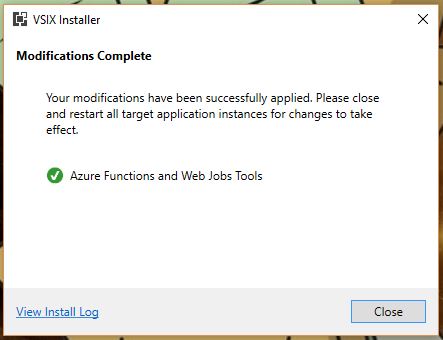

It was at zero percent for a long time, but that’s normal for Visual Studio. Its updates are never a quick thing. After it chugged along for a while, success!

Remember, I don’t actually need these components, but I was not going to let the VSIX installer win, and now I could raise my head in triumph. Plus, I can experience – for a brief moment anyway, if I know Visual Studio – the blissful Zen of the empty notifications window.

When it comes to a failed component update, I have seen others suggest uninstalling and reinstalling Visual Studio, clearing caches, even making changes to the registry! I have never needed to do any of that for any kind of installation or update hiccup. I don’t know why people jump to those, especially when a straight repair or modify, as shown here, has always worked for me. This is not an advanced technique or a hidden trick Microsoft doesn’t want you to know about; it’s a standard, Occam’s Razor approach that should always be the first line of defense for this kind of thing.

If this helps anyone out there avoid all those unnecessary machinations, then I’ve done my part.

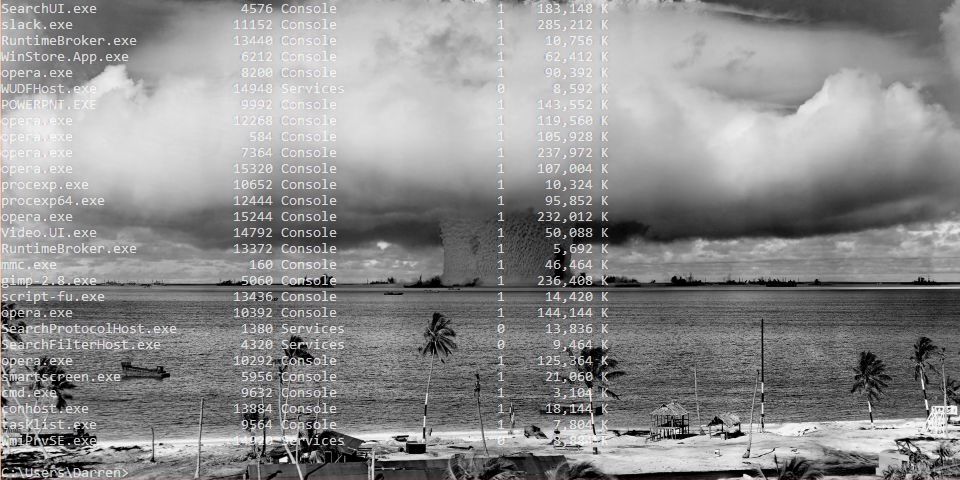

How to kill Windows tasks, even those that just won’t die

I recently created a video that shows how to kill tasks via the command line, and if that doesn’t work, how to kill them via Process Explorer, and if that doesn’t work how to disable them using the Services window. It’s embedded just below, but if you’re not in a video mood, fear not! I have summed up its contents, although the video shows you the steps and comes complete with witty commentary.

https://www.youtube.com/watch?v=ZMLookOYQXg&t=4s

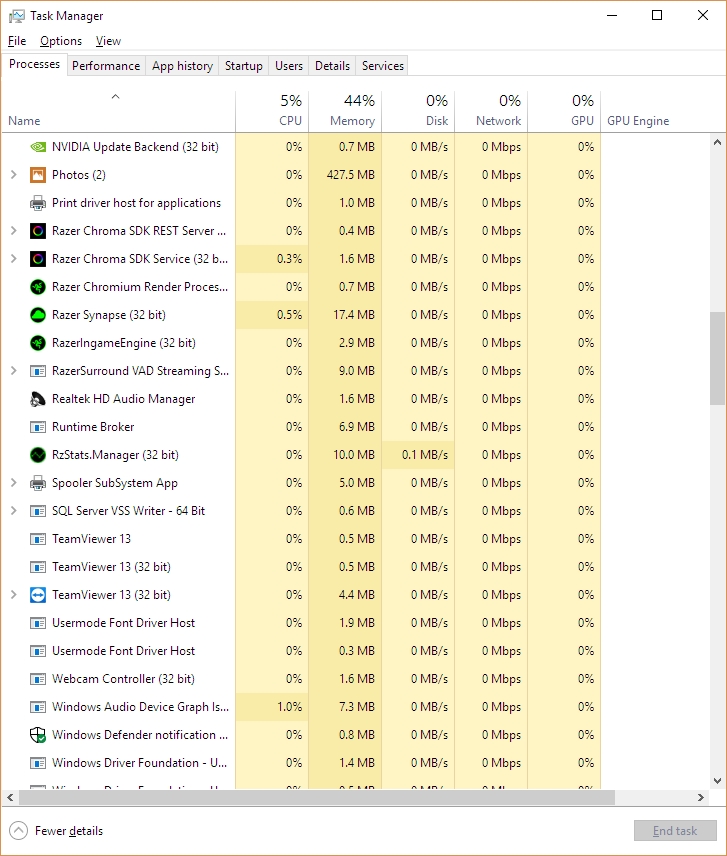

If you’re running Windows, any version of Windows, you know there are what seems like thousands of things going on in the background. All you have to do is bring up the Task Manager ([Ctrl]-[Shift]-[Esc], or the well-known [Ctrl]-[Alt]-[Delete] if you’re old-school and enjoy the extra steps) and you’ll come face-to-face with the process party happening inside your machine.

As you may also know, each of those running tasks requires some of your machine’s resources, sometimes a little, sometimes a lot, and Task Manager shows how much of each resource each process is using. If a process is something you don’t use or don’t need, it’s not a bad idea to stop it and recover whatever system resources it’s hogging. In fact, while taking the above screenshot I happened to notice that Windows’ Photos app, Movies & TV app, and Messaging app were taking up a lot, so away they went! Right-clicking a process brings up a menu that allows you to ‘End Task.’

But that’s also where an insidious problem lies. Sometimes, when you right-click and select End Task, the task just doesn’t end. This can manifest in a couple of ways: the task may continue to run, mocking and taunting you to click End Task again, reveling in your failure to stop it, or it might stop for a moment, only to reappear a second later, better, stronger, faster (not really better or stronger or faster, but it will reappear).

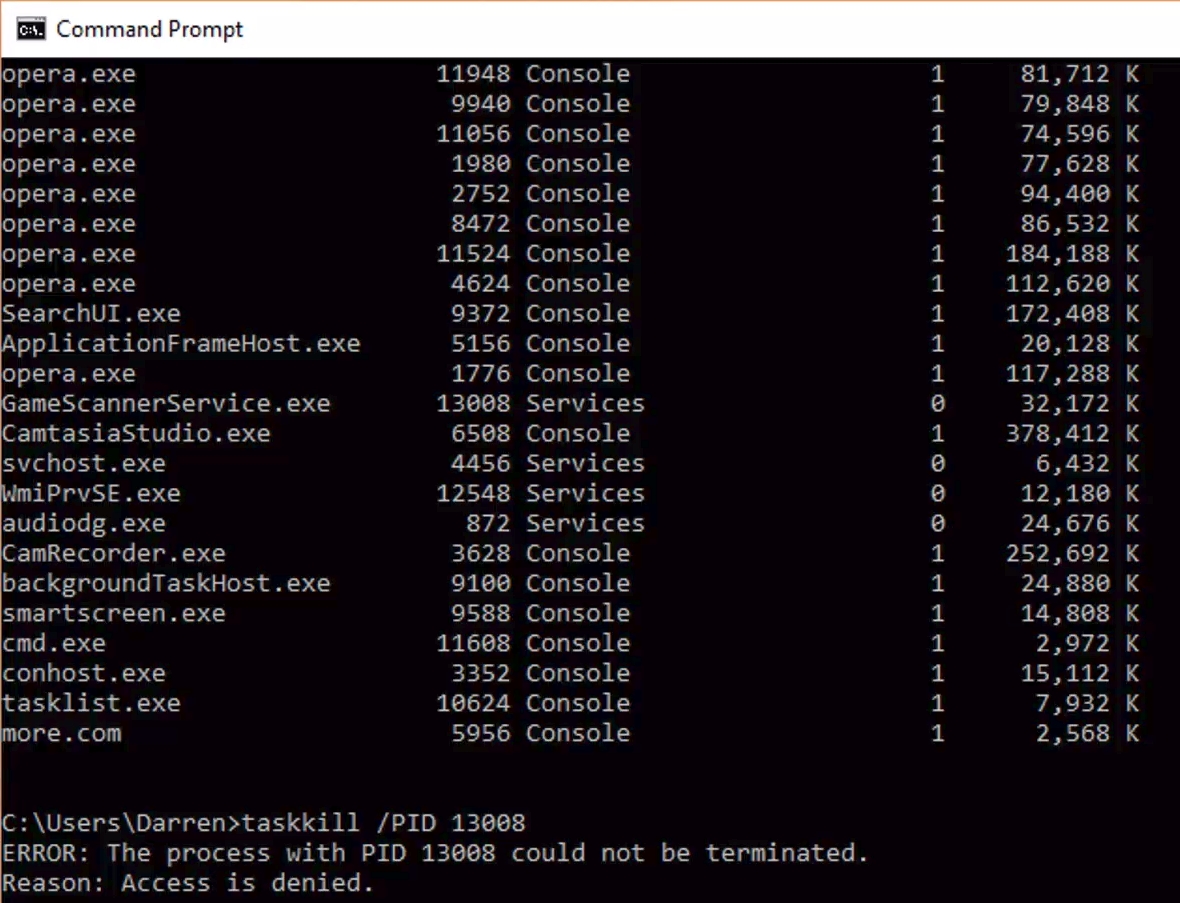

This even happens when using the command line to kill tasks. Normally this is done by listing the running tasks with tasklist and then, once the Process ID (PID) is known, running taskkill /PID followed by that PID. Even here, though, termination isn’t guaranteed, and your system may respond in a similarly mocking fashion.
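For anyone who prefers to script this, here is a minimal Python sketch that wraps the same two commands. It assumes you run tasklist with its CSV options (/FO CSV /NH) so the output is easy to parse; the helper names are my own, and memory parsing keeps only the digits because the thousands separator depends on your locale. It builds the taskkill arguments rather than running them, so you can check what it’s about to do first.

```python
from __future__ import annotations

import csv
import io
import subprocess


def parse_tasklist_csv(output: str) -> list[dict]:
    """Parse the output of `tasklist /FO CSV /NH` (CSV rows, no header line)."""
    processes = []
    for row in csv.reader(io.StringIO(output)):
        if len(row) < 5:
            continue  # skip blank or malformed lines
        name, pid, _session_name, _session_number, mem_usage = row[:5]
        # "Mem Usage" looks like "12,345 K"; the separator varies by locale,
        # so keep only the digits.
        memory_kb = int("".join(ch for ch in mem_usage if ch.isdigit()) or 0)
        processes.append({"name": name, "pid": int(pid), "memory_kb": memory_kb})
    return processes


def taskkill_command(pid: int, force: bool = False, tree: bool = False) -> list[str]:
    """Build a taskkill argument list: /F forces termination, /T also ends child processes."""
    command = ["taskkill", "/PID", str(pid)]
    if force:
        command.append("/F")
    if tree:
        command.append("/T")
    return command


def list_processes() -> list[dict]:
    """Run tasklist (Windows only) and parse what it prints."""
    result = subprocess.run(["tasklist", "/FO", "CSV", "/NH"],
                            capture_output=True, text=True, check=True)
    return parse_tasklist_csv(result.stdout)
```

On Windows, sorted(list_processes(), key=lambda p: p["memory_kb"], reverse=True)[:5] lists the five biggest memory users, and subprocess.run(taskkill_command(pid)) then tries to end one of them. Even with /F, a process can still come straight back, for the reasons below.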

Why does that happen? The main, though not necessarily the only, reason is that the task is part of a hierarchy of processes that keeps it from being shut down. Either it is a child spawned by a parent process that simply restarts it when it is stopped, or it’s a parent process that can’t be terminated because we can’t have orphaned tasks.
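To make the parent/child idea concrete, here is a small Python sketch that models a process tree from (PID, parent PID) pairs, the same relationship Process Explorer draws. The PIDs in the test are made up. descendants() returns the processes taskkill /T would also try to end, and ancestors() returns the chain of parents, any of which might be the one restarting a child you just killed.

```python
from __future__ import annotations

from collections import defaultdict


def children_by_parent(processes: list[tuple[int, int]]) -> dict[int, list[int]]:
    """Map each parent PID to its child PIDs, given (pid, parent_pid) pairs."""
    children: dict[int, list[int]] = defaultdict(list)
    for pid, parent_pid in processes:
        if pid != parent_pid:  # ignore entries that list themselves as their own parent
            children[parent_pid].append(pid)
    return children


def descendants(pid: int, processes: list[tuple[int, int]]) -> list[int]:
    """Every PID below `pid` in the tree, i.e. what taskkill /T would also target."""
    children = children_by_parent(processes)
    found: list[int] = []
    seen = {pid}
    stack = list(children.get(pid, []))
    while stack:
        current = stack.pop()
        if current in seen:
            continue  # guard against cycles caused by reused PIDs
        seen.add(current)
        found.append(current)
        stack.extend(children.get(current, []))
    return sorted(found)


def ancestors(pid: int, processes: list[tuple[int, int]]) -> list[int]:
    """Walk up the parent chain: candidates that might restart a killed child."""
    parent_of = dict(processes)
    chain: list[int] = []
    seen = {pid}
    while pid in parent_of:
        pid = parent_of[pid]
        if pid in seen:
            break  # stop on cycles, e.g. PID 0 reporting itself as its parent
        seen.add(pid)
        chain.append(pid)
    return chain
```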

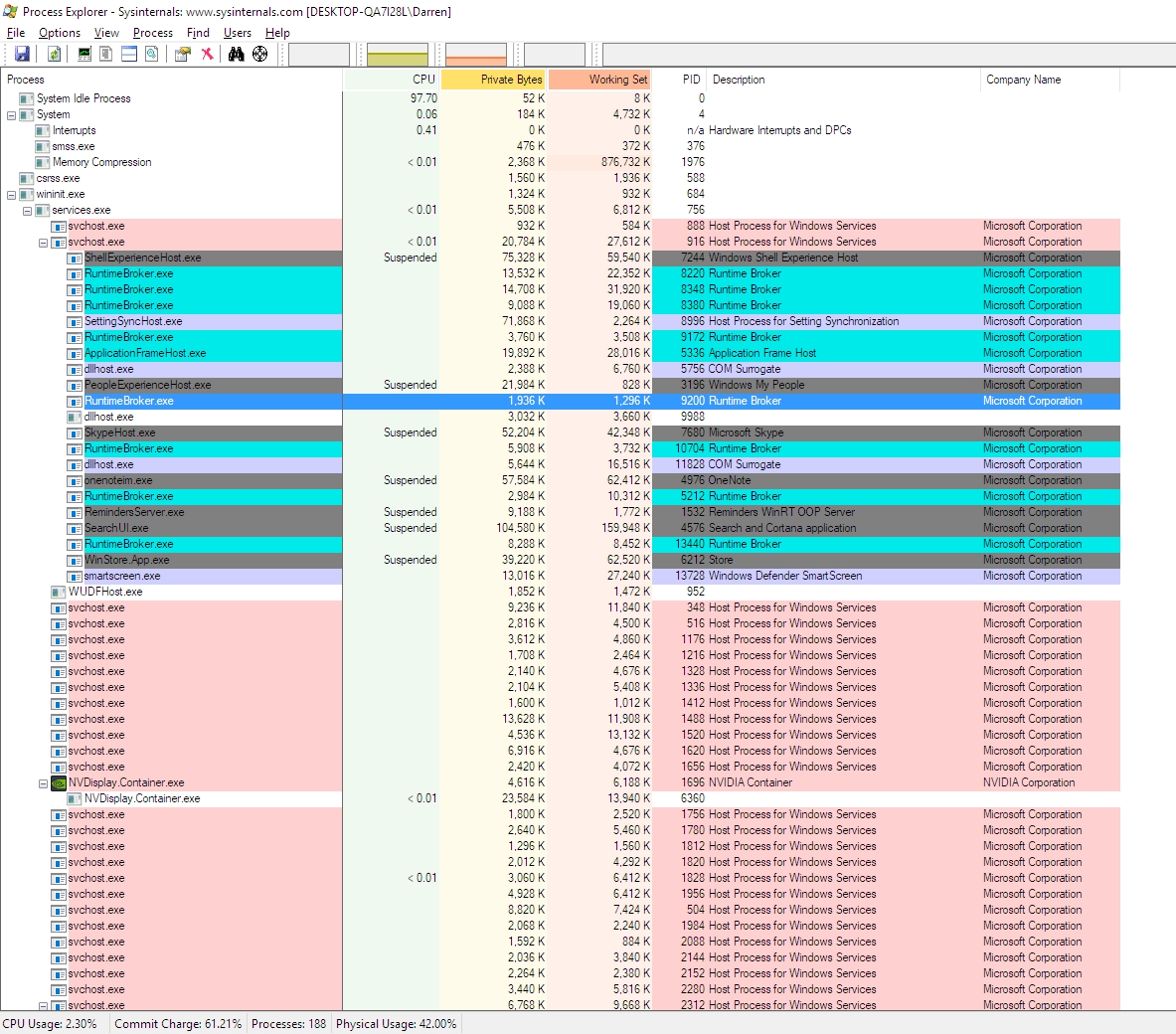

To determine which of these is the case, I am a big fan of Process Explorer, part of Microsoft’s Sysinternals suite of tools for maintaining and monitoring Windows. If you click on the link, you can see the other packages available in the left-hand sidebar; they’re quite comprehensive.

Process Explorer shows you everything that is going on: how many resources each process is using, including private and shared memory, its Process ID (PID), and the name of the company that developed the software, and it color-codes processes by category. It’s a great program that shows a lot and really gives an idea of not just what is going on but how it all relates.

You can see that some processes are child processes and some are parent processes, and whether you can kill a process depends heavily on the nature of that hierarchy. Even here, trying to stop such a task will prove futile, because the hierarchy is the same. Interestingly, in Task Manager right-clicking gives the option to End Task, while in Process Explorer it gives the option to Kill Process. Hmm. The deeper we go, the more violent we get.

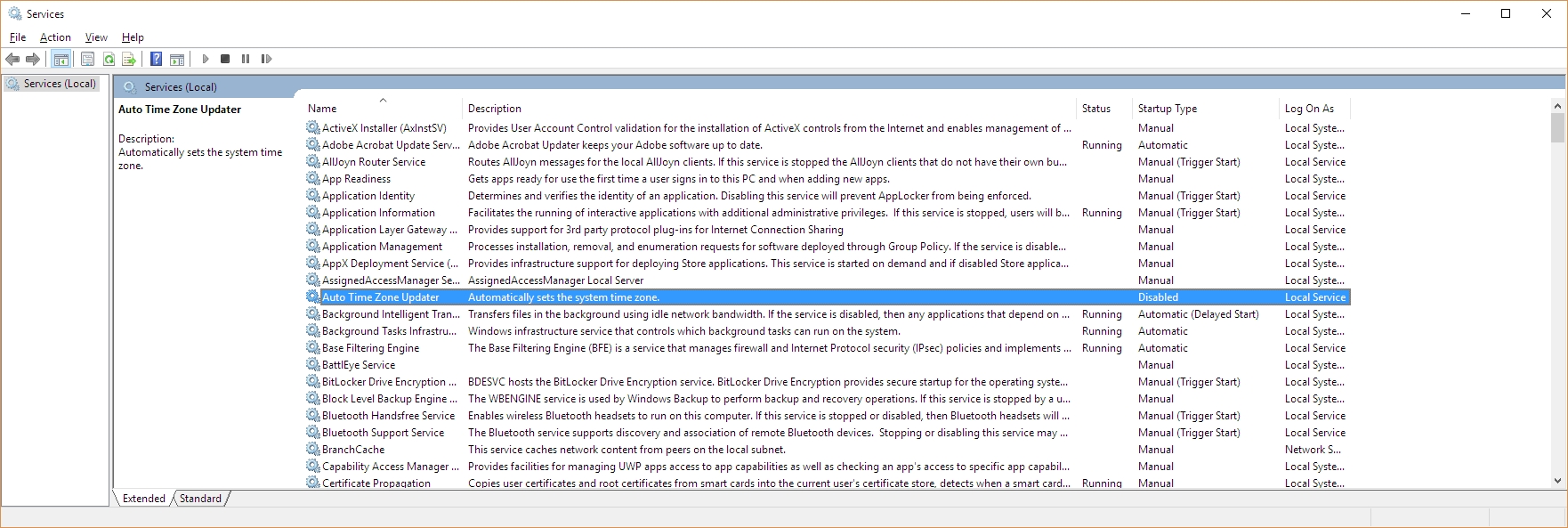

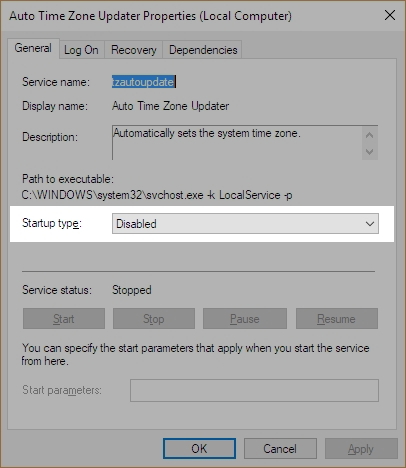

Ultimately, if you really want a process not to run, you should first make sure it’s not something you need. Google, even Bing, is your friend. If you are certain the process is just leeching resources, then you’ll have to open services.msc (you can run it by typing it into the search bar next to the Start menu), right-click the service, select Properties, and change its Startup type. Don’t set it to Automatic, obviously, or even Automatic (Delayed Start), but also don’t set it to Manual, because then it will just start up again if the system decides it’s needed. Be sure to set it to Disabled.
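If you’d rather not click through services.msc, the built-in sc.exe tool can change the startup type from an elevated command prompt. As a hedged sketch, this Python snippet only builds the argument lists; it assumes you already know the service’s short name (not its display name), and “ExampleService” in the usage note is a placeholder.

```python
from __future__ import annotations

# Labels from the Startup type drop-down in services.msc, mapped to the value
# that sc.exe expects after "start=".
STARTUP_TYPES = {
    "Automatic": "auto",
    "Automatic (Delayed Start)": "delayed-auto",
    "Manual": "demand",
    "Disabled": "disabled",
}


def sc_config_command(service_name: str, startup_type: str = "Disabled") -> list[str]:
    """Build `sc config <service> start= <value>` as an argument list."""
    value = STARTUP_TYPES[startup_type]  # raises KeyError for an unknown label
    # sc.exe wants "start=" and the value as separate tokens (hence the space on
    # the command line); separate list items give exactly that.
    return ["sc", "config", service_name, "start=", value]


def sc_stop_command(service_name: str) -> list[str]:
    """Build `sc stop <service>`, which stops a running service now."""
    return ["sc", "stop", service_name]
```

From an elevated prompt, subprocess.run(sc_config_command("ExampleService"), check=True) would set it to Disabled, and sc_stop_command() stops it if it’s currently running. The same warning applies: know what the service does first.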

That will stop the process from starting up, even if the system thinks it should. Remember, though, that if you *do* end up needing the service, you’ll have to go back into services.msc, change its Startup type back, and start it up yourself again, as this shuts it down completely. And I have to say it again: be careful about disabling processes. If you disable one that you need, or worse, one the system needs, you may find your device acting very strangely or, in a worst-case scenario, software or even hardware not working at all. Even if you don’t disable anything, this is a good way and a good opportunity to learn what your system is actually doing.

Russian botnet master nabbed in Spain, extradited to US

This is a story that has been ongoing for some time. Pyotr Levashov, a well-known and well-established Russian cybercriminal who was arrested in April of last year (2017, if you’re reading this in the distant future – welcome, alien overlords!) while vacationing in Spain, has finally been extradited to the U.S. Apparently cybercriminaling does pay well sometimes. The arrest was based on a formal U.S. Department of Justice indictment against him for, among other things, operating the Kelihos Botnet, a long-running, expansive, global botnet that bombarded the world with all kinds of spam for nonsense like get-rich-quick schemes and enhancement medications. If you’re interested, and you should be, you can read the DOJ press release about the indictment and the actual search warrant that allowed for their infiltration of the botnet.

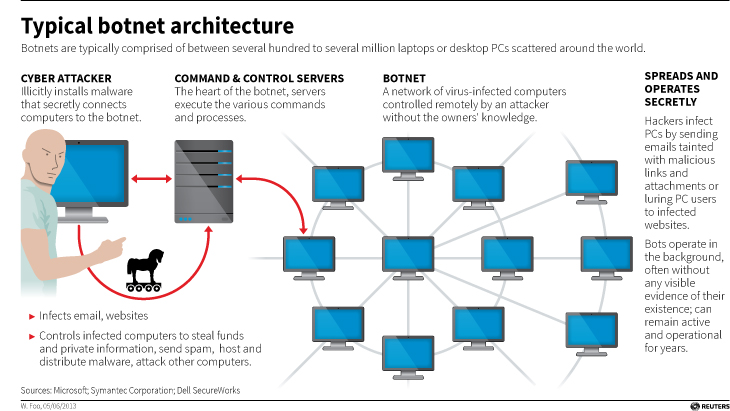

Before we continue, let’s talk about what a botnet is. When malware, or bad software (get it? Mal ware?), is surreptitiously installed on your machine, whether through a drive-by attack in which it’s embedded in a Flash ad, a link or file you click in a rogue email, or one of many other attack vectors, it will use your machine to carry out tasks without your permission, involvement, or even knowledge. And just to be clear, those tasks are bad. It can use your machine to send spam, participate in DDoS attacks, store harmful or illegal files, and carry out many other unethical / criminal activities, all without you ever being privy to what’s going on. When that happens, your machine is what’s known as a zombie computer, or more commonly, a bot. Now, imagine hundreds of thousands of these infected machines all acting in unison, toward a common goal or under a central control authority. That’s a botnet. Here’s an effective graphic from Reuters that illustrates the architecture of a botnet.

I wanted to embed an interactive map from Arbor Networks that shows attacks happening in real time and provides historical data, but their embed code, which uses iframes, doesn’t work on WordPress. I find it strange a security firm would still be supplying iframe embed codes, but who am I to judge? No matter; there are other sites that provide similar information using their own honeypot networks, such as Kaspersky’s real-time threat map and the well-known Norsecorp map. Actually, I had intended to use Norsecorp’s IPViking map; however, it is now run under HP’s banner (although still powered by Norse), and I simply couldn’t get it to work in any browser. Their map linked above works beautifully, though.

There are several interesting facets to this case. The first is that this guy has been around a long time and was one of the bad actors behind the Storm botnet that first manifested all the way back in 2007. That botnet was eventually dismantled by the combined efforts of Microsoft, anti-malware firms, and the feds, a partnership that continues to this day. We’ll come back to this particular botnet soon, because the architecture of these things is going to become important.

By soon, I mean right now! Another interesting aspect of this case is that the botnet was very sophisticated. It used a hybrid structure that is unusual for this kind of thing. Botnets are typically either peer-to-peer, in which all the infected machines communicate with each other to coordinate and carry out their nefarious activities, or they use what’s known as a C&C, or Command and Control, server that oversees the whole thing and controls the botnet from a more centralized location. That allows better control and oversight of the bots.

Kelihos, however, was a hybrid: there was a C&C server, but there was also a peer-to-peer aspect, with enough autonomy in the architecture for the bots to continuously update among themselves a list of secondary control servers to which they would report, and those were directly overseen by the main C&C. This is in direct contrast to the Storm botnet mentioned earlier, which was purely peer-to-peer. A hybrid network also allows for rapid updates to, and distribution of, associated malware.
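To see why that peer-to-peer layer makes such a network hard to shut down, here is a toy gossip model in Python. It is purely illustrative and is not Kelihos’s actual protocol: each node periodically merges its list of known relays with one random neighbour, so a newly added relay reaches every node without any central server announcing it. All node and relay names are made up.

```python
from __future__ import annotations

import random
from typing import Optional


def gossip_round(known: dict[str, set[str]], neighbours: dict[str, list[str]],
                 rng: random.Random) -> dict[str, set[str]]:
    """One push-pull round: every node swaps its list with one random neighbour."""
    updated = {node: set(items) for node, items in known.items()}
    for node, peers in neighbours.items():
        if not peers:
            continue  # an isolated node has nobody to talk to
        peer = rng.choice(peers)
        merged = known[node] | known[peer]  # based on last round's state
        updated[node] |= merged
        updated[peer] |= merged
    return updated


def rounds_until_known(item: str, known: dict[str, set[str]],
                       neighbours: dict[str, list[str]],
                       seed: int = 0, max_rounds: int = 100) -> Optional[int]:
    """Count gossip rounds until every node knows `item`; None if it never spreads."""
    rng = random.Random(seed)
    for round_number in range(max_rounds + 1):
        if all(item in items for items in known.values()):
            return round_number
        known = gossip_round(known, neighbours, rng)
    return None
```

For example, you could arrange ten hypothetical nodes in a ring, give a new relay name to just one of them, and pass both to rounds_until_known(). Because each round only uses the previous round’s lists, news travels at most one hop per round, yet nothing central has to announce it, which hints at why taking down a single server does so little against this kind of design.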

That leads to the next neat(?) thing about the botnet: it was aggressively and frequently updated. In fact, when a live sinkholing (in which the bots are redirected to different targets that can then help track the bots or even deactivate them) took place at the 2013 RSA security conference, a new version of the botnet rapidly took its place, which indicated that the creators were prepared for just such an emergency and had a contingency plan in place.

And this was not just a spamming botnet. Along with pushing spam of both the email and desktop pop-up kind, it also stole bitcoin and targeted banks and other large industries with industry-specific malware that could rake in millions of dollars while running undetected. For botnet software, it had a wide range of functionalities, both general and specific, although, for all it could do, it was not hard to track.

The next interesting aspect of this case is that Russia fought vigorously against Levashov’s extradition. Not by attempting to block it, but rather by filing an extradition request of its own, based on crimes it says he committed in Russia itself. Regardless of whether the Russian charges are true or an attempt to protect one of their own, that is a clever way of approaching it. It didn’t work, ultimately, and Levashov is now in U.S. custody, but it was an interesting tactic to counter the original extradition request. Not only that, it has happened before.

A really interesting story all the way around, and I’m curious to see how it concludes. In the meantime, be careful, ensure your OS is up to date and fully patched, be sure you are running up-to-date anti-virus and anti-malware protection, try not to visit questionable sites, don’t activate or respond to emails from unknown sources, use an ad-blocker (uBlock Origin is my preferred choice, and I have no connection to them; purely my own opinion), and just generally practice safe computing.

OpenOffice Writer: Good, better, but not yet great

People are always looking for a free alternative to costly productivity software, and never has that been more true than with Microsoft’s Office Suite. While Microsoft now has its subscription-based, $99/year Office 365 Software as a Service offering, it used to be that regular updates to Office could cost hundreds of dollars, especially if you were including Access in the package. People didn’t like the repeated substantial costs associated with new versions of software, and that was not a sentiment limited to Microsoft.

The thing is, while free substitutes are often available, they are also often simply not as good as the software they’re attempting to replace. Consider GIMP (GNU Image Manipulation Program). Intended to be a free option for those who don’t want to purchase Photoshop, as well as for those who would like to experiment with image manipulation and the like, it’s also much more cumbersome to use. Not that Photoshop is easy, but in terms of usability GIMP isn’t ideal. Simply drawing a straight line is a process, dragging handles is awkward, finding the right tool dock can be confusing, and if you’re just experimenting with it to get a taste of what image manipulation software is like, that taste will be bitter. Even the name itself is difficult, with GIMP being an acronym for “GNU Image Manipulation Program,” and GNU itself being the awkward recursive acronym “GNU’s Not Unix.” It used to be the General Image Manipulation Program, and I’m not actually sure when the name change took place.

Not that it isn’t effective – it is. In fact, I often use it myself for the images on this very site that require some touchup, such as adding text, combining multiple images into one, or adding illustrations, to name a few, and I even have an academic license for Photoshop! Quick and dirty manipulations are easier with GIMP, but as a full-featured piece of software, it’s functionality, not so much usability, that drives its development. For very simple things like batch resizing / converting, pixelating out info, or cropping, I just use the IrfanView image viewer, which is much more effective and easier to use for that type of thing. But not for adding text; it’s terrible for that.

So why do I mention all of this? A friend who recently lost her job needed to get her resume updated and out to potential employers fast. However, as she doesn’t make boatloads of money she had an older laptop and no Microsoft Office installed or available to her. She was trying to edit said resume in a reader, not realizing it doesn’t work like that, and without having the finances to acquire Office, she asked me for help.

My first bit of advice was to use Zoho online, a remarkably feature-packed online office suite that deserves its own, dedicated post, so I will add a followup soon with screenshots, samples and impressions. The problem is, they don’t offer a locally-installed solution and she doesn’t have Internet access, so that solution was out. I then suggested OpenOffice, a free Office alternative that has been around for a long time and that I hadn’t tried in years and years. I guess I should refer to it by its proper name, Apache OpenOffice, since the original OpenOffice no longer exists: it was originally developed by a company called StarDivision, which was acquired by Sun, which was in turn acquired by Oracle. Did you follow all that? It’s like a software soap opera. Anyway, OpenOffice was turned over to the Apache Software Foundation, which is dedicated to community-built open-source projects and has a staggering number of them. Its web server is, and has been for a long time, the most widely used web server on earth.

When I last used OpenOffice, long before it was taken over by the Apache Foundation, it was bad. This would have been back in the late 90s, and even then it couldn’t hold a candle to Office. The icons were unintuitive, the functionality limited, the compatibility wonky; it just wasn’t a good alternative. I’m glad to say that after twenty years it’s better than it was. It’s good, but it’s still not great, and it has one glaring flaw that really holds it back.

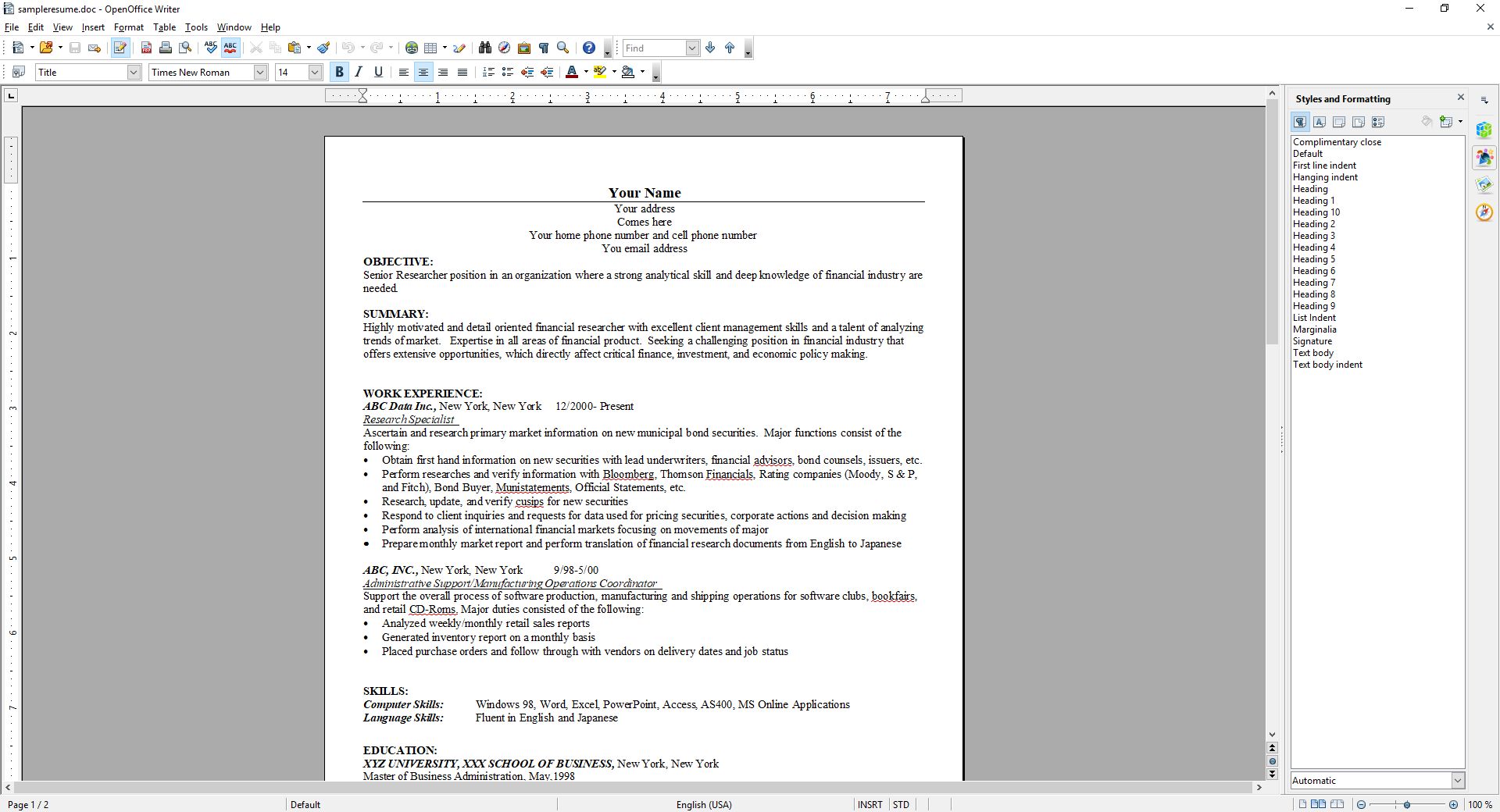

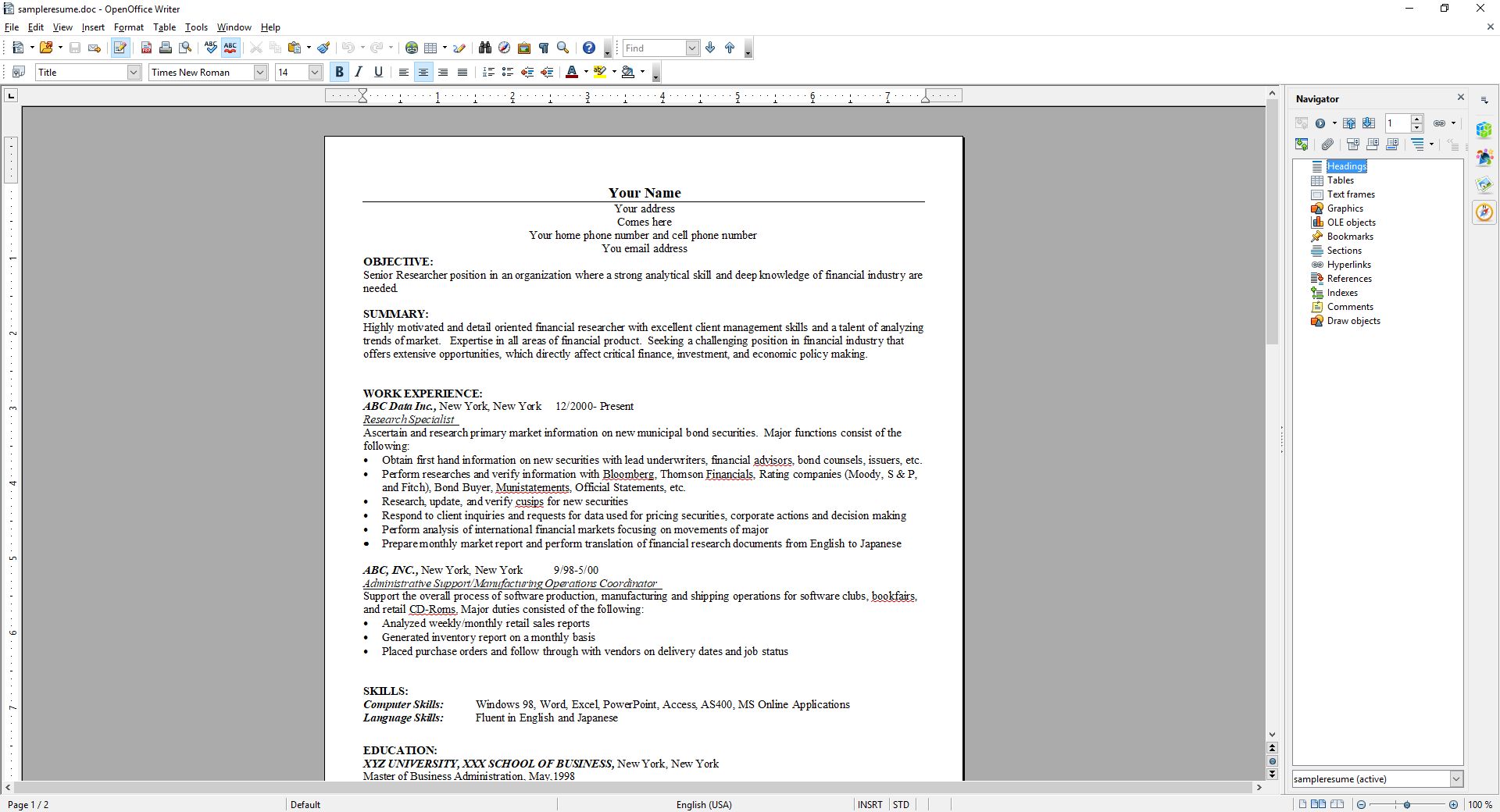

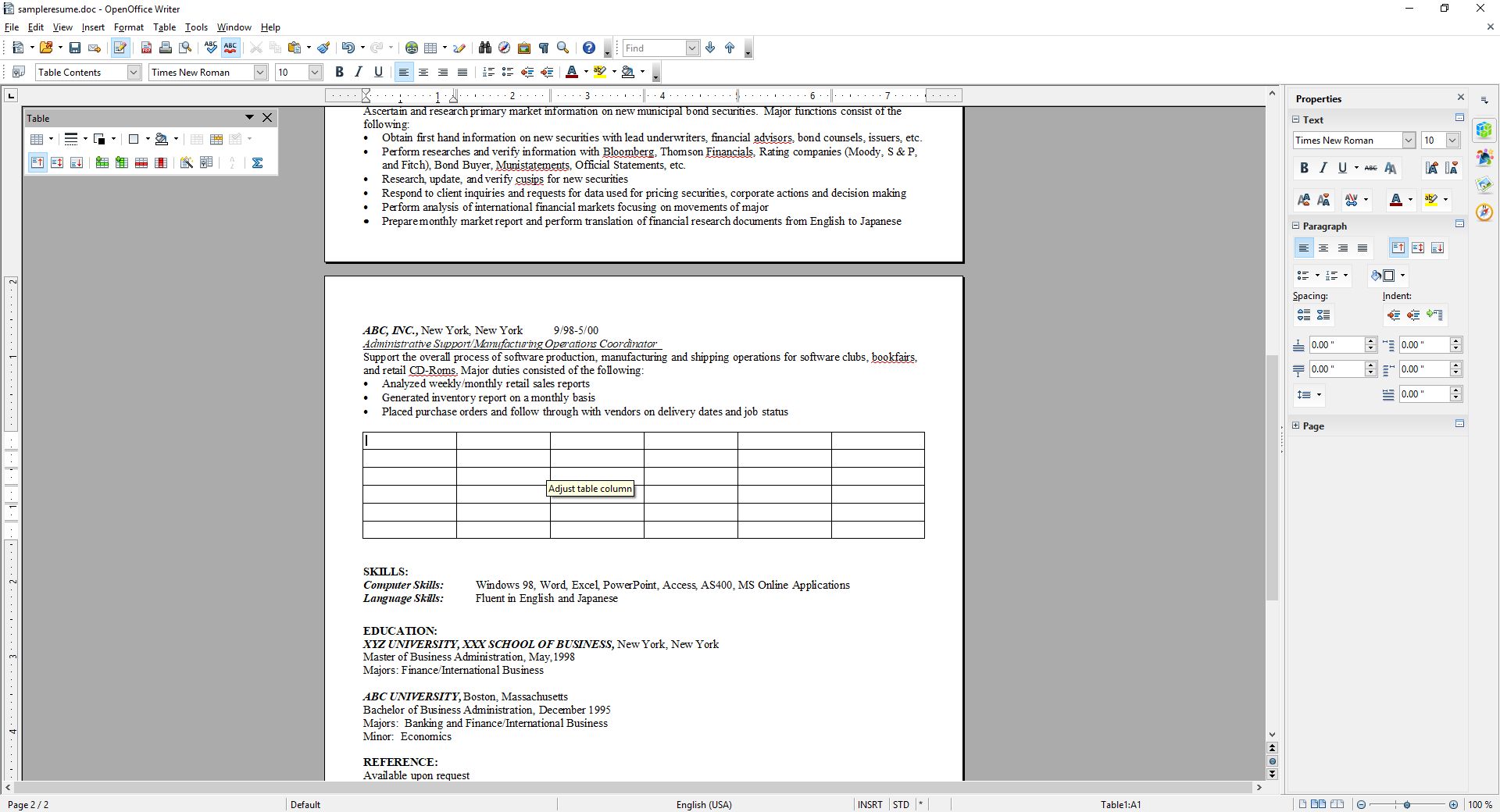

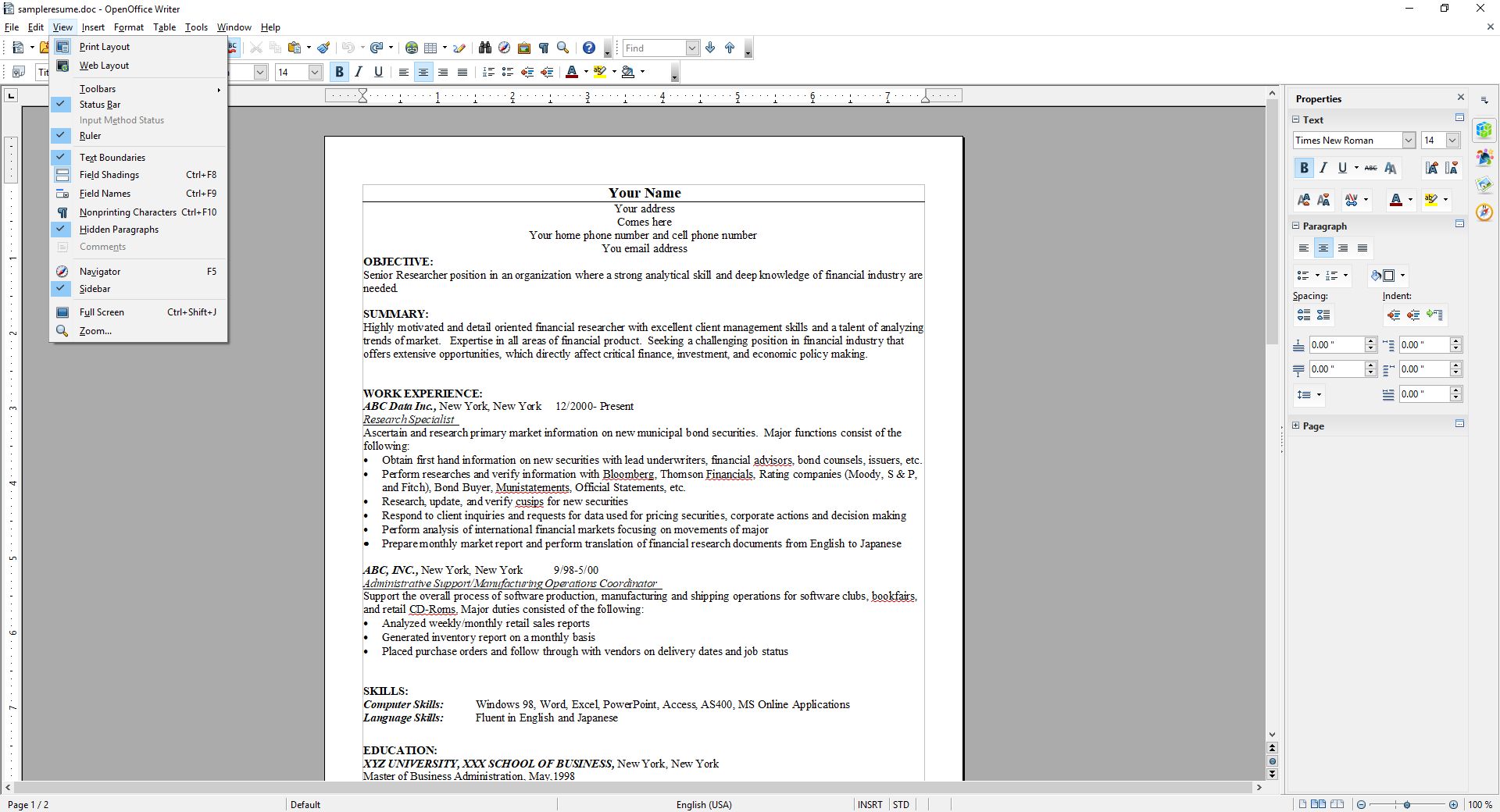

First, the good: the interface is much closer to what one would expect from a standard word processor. You can see in the image below the standard toolbar, which is also replicated in the ‘Properties’ dialog located in the sidebar. The icons are much better, meaning more standardized, although not completely, but duplicating them across the top and side can cause issues: functional and graphical replication is poor interface design, and a mistake you often see on webpages. The top menu uses the standard “File | Edit | View | etc.” layout with the expected submenus under each entry (see the ‘text boundaries’ image at the end of the post). You can also see in the image(s) that the icon for ‘Properties,’ one of four down the far right side, is a green and blue cube, bespoke for this program, while underneath it are the icons for ‘Styles,’ ‘Gallery,’ and ‘Navigator,’ the last of which is used to move through the document itself via its various elements. All of these are unique in function and design to OpenOffice.

It also has an extensive array of settings that covers every aspect of the program you could hope for, even memory allocation and VBA integration.

Using a sample resume I downloaded from the Internet, I tested how well it handled inserting elements, specifically an image and a table. This is something that can trip up even Word itself, but I am happy to say it handled both swimmingly, accurately integrating, aligning and formatting both with ease. The text adapted easily to any changes in size or position, and the results were always pleasing. It also pops up a task-relevant toolbar to help with fine-tuning or further formatting the element being inserted.

Again, after insertion both of them were easy to adjust and format, and the rest of the document was very responsive to those changes. While this post doesn’t cover all you can do with Writer (you can imagine how long a post covering everything you can do in Word would be, and it’s the same here), you can get an idea from the images, specifically the ‘Navigator’ image included above.

I’m also not a big fan of the text boundaries that are shown by default; they make the whole document look as though it is too small on the page. I get that they are trying to illustrate the margins as well as provide a quick and dirty print preview, but ultimately I find them distracting. Fortunately, they can be turned off completely (which makes it look more like Word, and the familiarity is welcome).

The two big problems with OpenOffice are, unfortunately, major issues that prevent me from recommending it completely, especially as there are other programs and online options that don’t have these concerns.

The first is primarily an issue if you import a .docx (Word 2007 to present) file to work on. If you create your document from scratch in OpenOffice, it works quite well and is feature-rich enough to handle even fancy work. If you’re importing Word’s latest format, on the other hand, forget it: processing hidden Word formatting codes is not Writer’s forte. Writer will destroy enough of the formatting that fixing it is not worth the effort, and copying and pasting the text without formatting, then reformatting it from scratch, is often, though not always, the only viable option. Even so, the common outcome is that no amount of tweaking will right the formatting ship: you can recenter and reformat and unbullet and rebullet and tab all over the place all you like, but it will never get back to the way it was; Writer simply won’t play nice when you try to undo the damage from an import.

Building on that is the most glaring issue of all: Writer can open .docx files, but it can’t save them. You can only save to the older .doc format, which Word stopped using as its default about ten years ago. Writer’s own format, .odt, is different enough that going the other way, opening a Writer file in Word, will also yield unfortunate formatting issues. Other alternatives like the aforementioned Zoho handle .docx easily, but not OpenOffice. In fact, when you create a new document, Writer asks if you want to create a new ‘Text Document,’ a term that in Windows and on the Mac usually means a plain-text file, not a fully formatted, functional, professional document.
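To make those "hidden Word formatting codes" a little more concrete: a .docx file is a ZIP archive of XML parts (the Office Open XML format), and character formatting such as bold or italic is stored as tags on each text run inside word/document.xml. The minimal Python sketch below uses only the standard library to list the parts of a .docx and pull out the formatting tags on each run. The tiny archive it builds is a hypothetical, stripped-down example rather than a valid Word document (a real one also contains parts such as [Content_Types].xml and word/styles.xml), and the sketch does not reproduce Writer’s import problems; it only shows where that formatting lives.

```python
import io
import zipfile
import xml.etree.ElementTree as ET

# Namespace used by WordprocessingML elements inside word/document.xml.
W_NS = "http://schemas.openxmlformats.org/wordprocessingml/2006/main"


def list_parts(docx_bytes: bytes) -> list[str]:
    """Return the names of the parts packed inside a .docx (ZIP) archive."""
    with zipfile.ZipFile(io.BytesIO(docx_bytes)) as zf:
        return zf.namelist()


def run_properties(docx_bytes: bytes) -> list[list[str]]:
    """For each text run (<w:r>), list the formatting tags found in its <w:rPr>."""
    with zipfile.ZipFile(io.BytesIO(docx_bytes)) as zf:
        root = ET.fromstring(zf.read("word/document.xml"))
    result = []
    for run in root.iter(f"{{{W_NS}}}r"):
        rpr = run.find(f"{{{W_NS}}}rPr")
        # Strip the "{namespace}" prefix so "b" means bold, "i" means italic, etc.
        tags = [] if rpr is None else [child.tag.split("}")[1] for child in rpr]
        result.append(tags)
    return result


def make_tiny_docx() -> bytes:
    """Build a hypothetical, minimal archive with one bold+italic run and one plain run."""
    doc = (
        f'<w:document xmlns:w="{W_NS}"><w:body><w:p>'
        '<w:r><w:rPr><w:b/><w:i/></w:rPr><w:t>Resume</w:t></w:r>'
        '<w:r><w:t>plain text</w:t></w:r>'
        '</w:p></w:body></w:document>'
    )
    buf = io.BytesIO()
    with zipfile.ZipFile(buf, "w") as zf:
        zf.writestr("word/document.xml", doc)
    return buf.getvalue()


if __name__ == "__main__":
    data = make_tiny_docx()
    print(list_parts(data))      # ['word/document.xml']
    print(run_properties(data))  # [['b', 'i'], []]
```

Pointing `run_properties` at the bytes of a real .docx would show how much per-run formatting an importer has to translate, which hints at why a converter can get it wrong.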

This post only covered OpenOffice’s word processor, Writer, although the other applications exhibit similar behaviors and limitations. They work well if you are working solely within OpenOffice, but not if importing or exporting.

I want to see OpenOffice succeed, especially under the Apache umbrella, but as of now I simply can’t recommend it as it is. That’s distressing, since I’ve never been able to recommend it over the last twenty years, although it’s always been very, very close. It works well on its own and with its sister programs, and has incredible potential, but the fact is it doesn’t play well with others, and until it does it simply won’t be a viable alternative.

The new Oculus Home and store experience

Facebook-owned Oculus released their Rift Core 2.0 software a couple of days ago, completely revamping the out-of-app experience users have when they don their Rift headset. It’s a vastly improved experience over the original Oculus Home (which wasn’t all that bad, to be honest): much more feature-rich, streamlined, and user-friendly. However, it is still clearly in beta, which is a good thing, because it is also still, bafflingly, missing some foundational functionality. According to the Oculus developer blog, much more is coming, and I am very much looking forward to it.

I have made a video, embedded at the end of this post, although making it involved some strange difficulty. When you are in a VR app, the app is automatically mirrored to a window on the desktop, but not when you are in Home or the store. For reasons we may never know, the developers made that window hidden, and it takes a third-party app, cleverly named HomeUnhider, to make it visible. I would have used the more popular OculusMirror.exe, supplied with the Oculus software itself; however, unlike everyone else on earth, my Oculus program folder was empty, missing all of the software that was supposed to be installed along with the core experience, and that software isn’t available for download.

“No problem,” I thought confidently, “I’ll just use HomeUnhider.” Great idea, except HomeUnhider doesn’t work with the new beta experience, giving an error that reads ‘Oculus Home not found.’ It required a complete uninstall and reinstall of the Oculus software, but I did then get the mirror program that allowed me to capture the video (for those interested, the complete path is C:\Program Files\Oculus\Support\oculus-diagnostics\OculusMirror.exe). Camtasia then proceeded to cut off the bottom of the video, so you can’t see the dashboard as much as I’d hoped. It’s also a low-res video, which is strange, as I use Camtasia a lot and don’t normally have that issue. Here’s a pic of the original Oculus Home, lifted from the Windows Central forums, to set a baseline.

As you can see, it’s not bad. It has a nifty, pseudo-futuristic-while-simultaneously-rustic vibe going on, and the home and store functionality is combined into a single interface. You can see yourself, your friends (yeah, right), highlights from the store, and recently accessed apps. The image actually shows an older version of Home; before this update, it had already been upgraded from that design with additional navigation, categories, and so on. It looked generally the same, but with some additional functionality. Of course, you could look around your home if you just wanted to chill, as the kids say.

In the just released beta, however, it has been completely revamped. Your house now sits on what appears to be the cliffs of a…Mediterranean, perhaps, or maybe Spanish inlet, part of a larger coastal village, with your balcony looking out over the water, which also happens to have some pirate ships. Can’t argue with the view!

There are also some activities you can do, such as shoot a bow and arrow at nothing in particular, fire what appears to be a virtual incarnation of the Sega Master System Light Phaser (which could be dangerous in the wrong hands) at similarly 8-bit targets, or lob golf balls into the water. For such simple activities, they’re oddly fun. You can navigate around your house, something you couldn’t do before, and you can now do some limited decorating. By selecting different patterns you can change the look of the ceiling and walls, and other accouterments can be placed around as well. The carrot here is that by playing games and using apps, or simply spending time in Home, you’ll earn additional items and decorations that you can use to further spice up your space. And don’t worry, there are no loot boxes here.

I suspect that there will eventually be many more ‘homes’ from which one may choose: on the developer blog linked earlier, there is what appears to be a house in outer space as opposed to the seaside location it’s in currently, and my guess is there will be more than that. The blog states they’ll be rolling out content over the course of the next year, and I suspect beyond that. Hey, Sony’s PlayStation Home may not have been a hit, but the homes you could buy were spectacular, and it never came out of beta! I can only imagine what it will be like if we can get homes like that in VR; I’d buy every single one. They even had one that was completely underwater, with whales and whatnot swimming outside massive windows… Now I’m nostalgic and I can’t find a single picture of that house, so instead here’s a picture of an underwater, Bioshock-themed apartment you could get. Still pretty impressive, and apparently there’s some weird version of PlayStation Home that still exists on the PS4.

GLORIOUS UPDATE: It turns out the environments are already available! I wasn’t aware you could scroll through decoration options within categories, however I began to suspect something was up earlier today when it said I had 78 objects but was only showing a few. After figuring out how to scroll, guess what appeared! That’s right: A space environment, and my new favorite, a city environment called ‘Vertigo.’ I made an additional video just to show them. They’re very impressive, and now I can’t wait to see the others that I’m certain will be available in the future – fingers crossed for an underwater theme!

So, back to Oculus: there is the new dashboard, which I think is a huge and much-needed improvement. It now curves around you like a futuristic control panel, offering access to settings, recently used apps, some status info, the store, and social info. It’s easier to use, especially if you’re standing up; using it while sitting requires some contortions with your wrists to get the pointers in the proper place. There is also a Desktop button that mirrors your monitor right there in VR, and if you have multiple monitors it will ask which one you’d like to see. The insanely nifty thing about that is that you can pin programs to the curved dash just like you can with the Windows taskbar or Mac dock, and even pull windows off your desktop and pin them right in the air in your virtual house! They’ll follow you around, so you can watch YouTube videos, play a game, or browse the web, all as you mosey through your virtual environment. It’s an incredibly useful feature; however, you can’t pin windows to, say, the wall like a picture, which I think would push it over the edge into unparalleled awesomeness. Rather, they hover right next to you, and they can even overlay an app you’re using, so you never have to be away from that admittedly very important video that finally proved the existence of life on another planet.

The store has been separated out as its own location, designed as a post-modern, I don’t know, office lobby? There’s a very natural theme to it with an Oculus-branded waterfall, wooden curved steps on either side of the round room, metal balls rolling along tracks under a glass floor, and you can even see silhouettes wandering around behind the upstairs windows. Oddly, and at the same time pretty awesomely, if you turn around you’ll see a very futuristic, yet also immersion-ruining unanimated cityscape. No blinking lights in windows, animated billboards, blinking stars, nothing. But it is an interesting contrast between what’s in front of you and what’s behind, one you can see below.

The store functionality is generally the same as it was before, which is a problem. They have finally added video previews of apps you’re viewing, something the Gear, the mobile counterpart to the Rift, has had forever, so adding it to the Rift now is just playing catch-up. Worse, there is still no search function, a maddening omission of as basic a usability feature as there is, especially when the list of apps can, in some categories, go on for 100 pages! While apps are normally listed six at a time, their ‘Gallery’ titles, which are described only with the very vague ‘A broad, less-filtered collection from VR creators,’ are for some reason listed three at a time, and there are hundreds of them! I also have no idea what ‘less-filtered’ means, and when I clicked on ‘Learn More,’ Oculus crashed and wouldn’t restart without a reboot or taskkill, because its background process simply wouldn’t quit, even from Task Manager. Anyway, you can sort, but you may still have to scroll through pages and pages and pages and pages of apps to get to the one you want.

There is also no browser, something its little brother the Gear has had for a good while now. It’s very strange; these improvements to Home and the new environments for Home and the store are great, but the Rift still lags behind the version you use by plugging in a phone. The Gear version of Home also has voice search, plus other things like events, highlighted videos and better social integration, something that even I, with no friends on this or any other game platform, can see is much better on the Gear. That the Rift still lacks the basic functionality its phone-based counterpart has enjoyed for so long is unforgivable. I’m overjoyed at this new Rift experience, and I have very high hopes, not only for its continued development and what that will bring us, but also that it can simply be brought on par with what you would expect to be its much less capable sibling.

It’s important to also add that regardless of my complaints and concerns, Oculus is light years ahead of Valve / HTC and their Vive in terms of the interface. The Vive interface, a Frankenstein-like mashup of three, maybe four, maybe as many as seven separate interfaces (it’s hard to tell, it’s so badly designed), is overpoweringly difficult, cumbersome and unintuitive to use. So awful and impossible to navigate is the Vive interface that it may be, and I say this in complete seriousness and without hyperbole, the single worst interface I have ever used. In my line of work I use a lot of terrible interfaces, so Vive has really accomplished something here. Not only that, SteamVR integrates beautifully with Oculus, so there’s no need to use the Vive at all, at least not in my household.

Below are three videos. The first two are from Oculus: one highlights the new Home and its potential options for customization, and the other introduces the new interface, which they call ‘Dash.’ The third is the one I took on my own Rift in Home and the store.

A trip to the Computer History Museum

As a computer historian, I have – of course – always been a fan of the Computer History Museum in Mountain View, California. It’s a remarkable, amazing place, where you can see displays relating to things we are already familiar with: Things such as the evolution of the Internet and hypertext, a small display on gaming, as well as a slew of hardware, much of which I actually used at one point or another (Hello SGI workstation!).

There are also more eclectic displays, such as the one regarding the evolution of computer graphics and the hardware that created and displayed it (one more time: Hello SGI workstation!). Even the well-known Utah teapot that became a standard for computer graphics and animation has its own display.

A relevant side-note I should mention here: I’ve mentioned Silicon Graphics workstations twice now, and as fate would have it, The Computer History Museum itself is housed in the old SGI building. See how things come around?

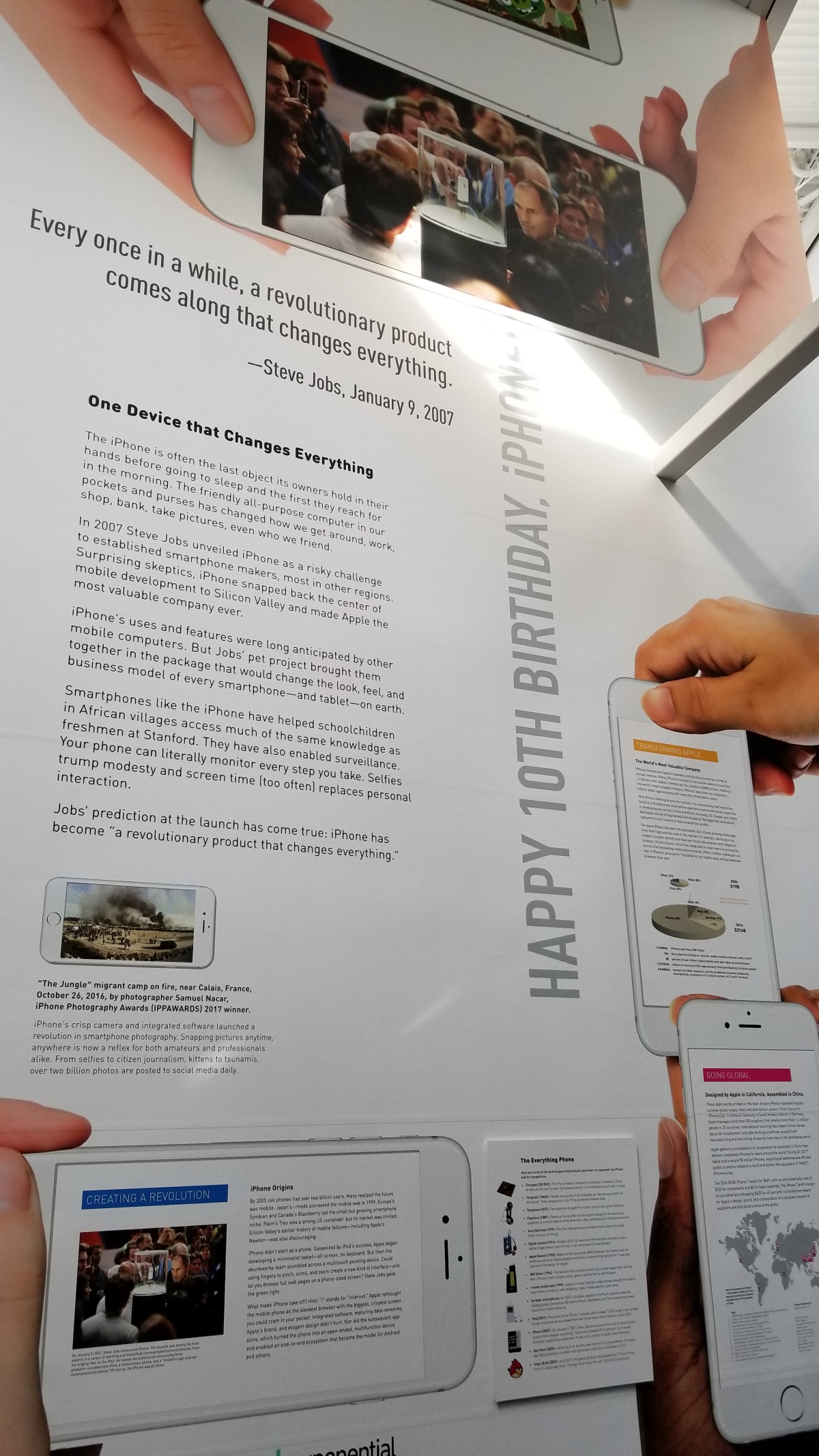

Right when you walk in, there is a huge display about the iPhone, and I was very impressed by how fair it was; it sang the phone’s praises while at the same time presenting some of the controversies surrounding it in a very balanced and forthright way. Kudos to them for that.

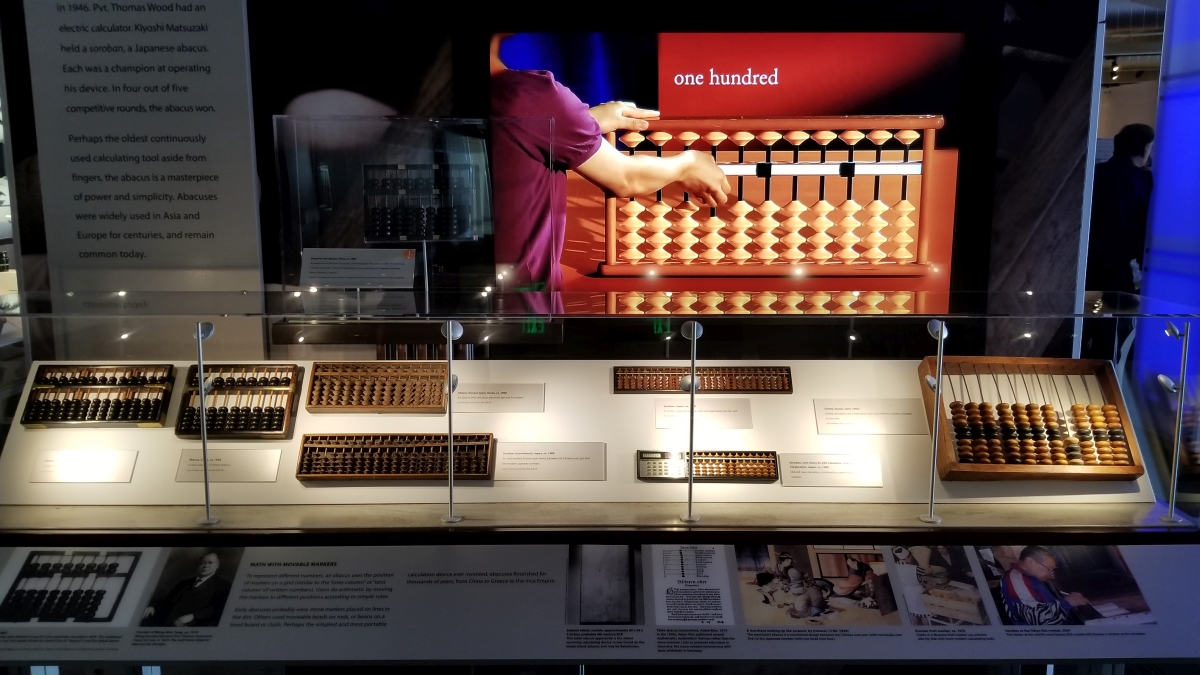

Its most interesting displays are, at least in my humble yet nonetheless correct opinion, the ones dealing with the real, wayback history, including an extensive display about the abacus, the slide rule, and less-known analog computing tools such as Napier’s Bones, with more on offer. These tools were not easy to use, which is to the credit of those who developed and used them, and there are directions on how they worked for those who wish to give them a try.

After your time in that room, you are introduced to one of the most significant events in computing history, and one students in my Ubiquitous Computing course spend a whole week learning about: the creation of the Hollerith Tabulating Machine, developed by Herman Hollerith to compute data for the 1890 census. It was a huge success, both in terms of its function and design, and formed the foundation of what would later become IBM.

Soon after this exhibit come the computers of the 40s, 50s, and 60s, ones mainly used by governments and universities, and that often took up rooms of space thanks to their prodigious use of vacuum tubes. There were many, and there are many on display, some from companies well known and some from one-shot attempts. Below are pictures of the IBM 1403 mainframe, including tape drives and card reader/sorter, as well as what I feel is a pretty nifty image of the Johnniac tube array. Of course, the highlight of the display is their UNIVAC, to which mere photos could never do justice.

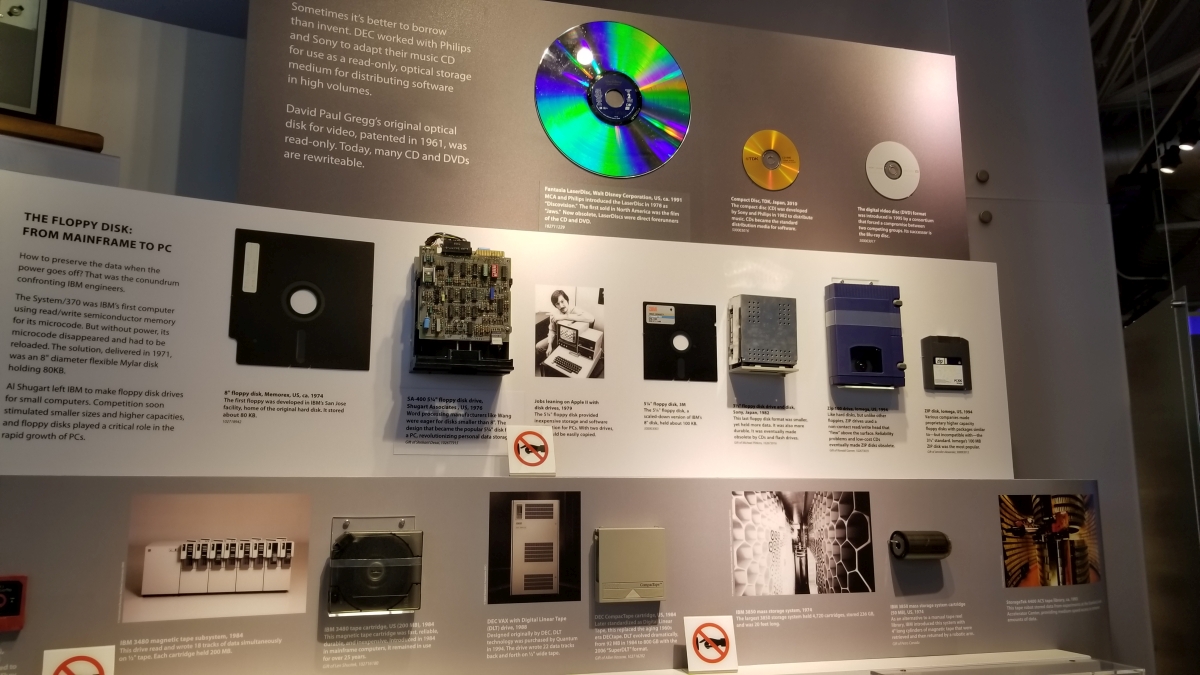

Another thing my Ubiquitous Computing (or Internet of Things, if we want to be familiar) students learn about is the evolution of storage, and the CHM doesn’t disappoint here either. Whether wire-wrapped core memory, or magnetic versus optical storage, the museum presents a very thorough look at how storage of all types has evolved from the very beginning.

One thing I found disappointing about the museum is their anemic display on gaming. It’s as cursory as it can get, with very few displays and nothing about the real history of the industry, something we spend weeks talking about in my game development course. There’s nothing about Adventure, an absolutely groundbreaking game that my students have to play and write a paper on, and nothing about the defection of Atari employees because of atrocious company management, with said employees then forming Activision, the first third-party publisher, which completely disrupted how games were made. It’s a minuscule display that fails gloriously at really discussing the history of gaming, and instead simply presents what appear to be random artifacts. It didn’t even warrant a picture.

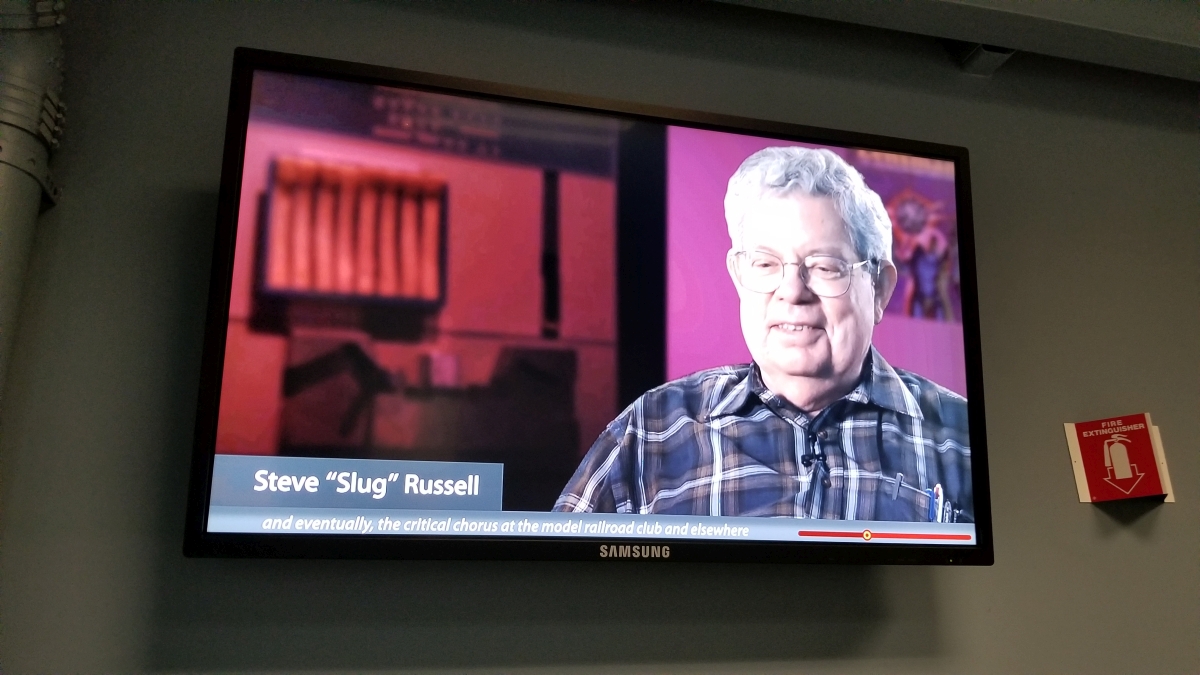

That being said, they do have a fantastic display of a PDP-1, the machine on which the very first computer game, Spacewar!, was developed. To educate visitors about how significant this was, they also have a looping video of Steve Russell, who developed the original, playing the game. Of course, if you took my class, you would know that he developed the base game, but others added features such as a star map based on the actual night sky, gravity, additional controls, and so on. It’s humbling to see, and since computer companies back then sold hardware while giving software away for free, the game garnered a lot of attention, as PDP-1s were, comparatively, used a lot by the kind of people who would be interested in something like that (about fifty were made, but they all had Spacewar!). Also, just to clear up any potential confusion, the exclamation point in Spacewar! is actually part of the title. If you’re interested in giving it a try, you can play a faithful HTML5 version at this link.

The museum has a ton of exhibits, from the Altair that Bill Gates wrote his company’s first program for, to the first Apple computer signed by Steve Wozniak, to consumer robots over the years, to a Commodore PET (the computer on which I first learned programming), even an Enigma machine! If you’re interested in the history of technology and computing, it really is a fascinating place, covering everything from the earliest attempts at computing to the future and beyond. To see some of the fascinating displays on offer, from the first transistor to the Xerox Alto with the first GUI to the Cray supercomputer and a ton of other great stuff, have a look at the pictures in the album below.

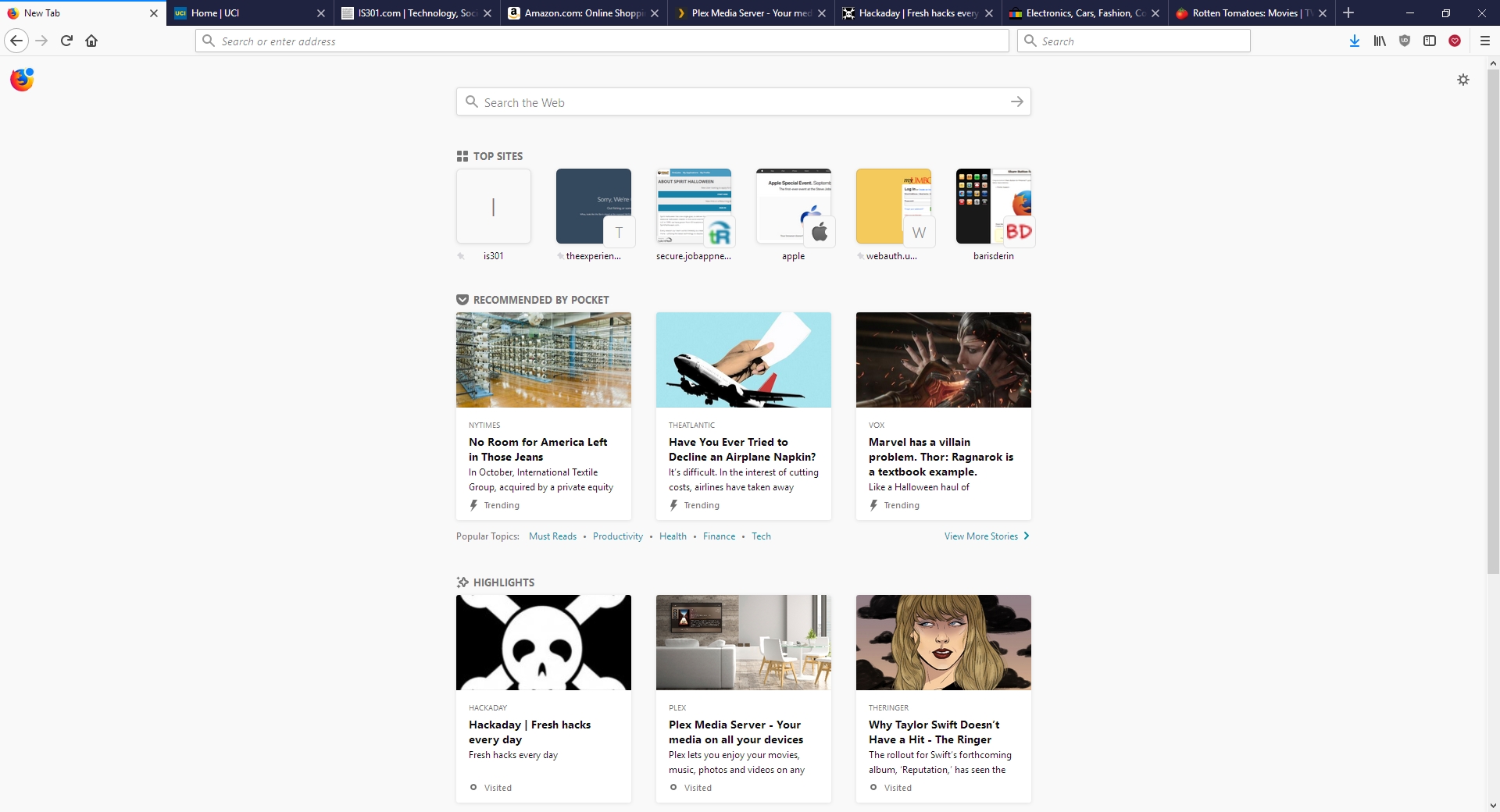

The new Firefox browser seems pretty good so far

I’m an Opera guy. Not an ‘opera’ guy, although I have nothing against that particular type of theater, but an Opera browser guy. Many years ago I was a Mozilla Firefox guy, especially as they rose from the once-great Netscape Navigator; for many years now, however, Opera has been the Samsung Galaxy to Firefox’s iPhone. In other words, Opera always had features that Firefox offered much later or didn’t have at all except through add-ons, and it was simply faster, more responsive, more stable, and easier to use. Not only that, Firefox has been infamous for its memory leaks, a problem it was never able to solve (Chrome, too, let’s not forget about Chrome). I should also mention, in the interest of fairness, that plugins could exacerbate the problem; however, the base browser always struggled with the issue as well.

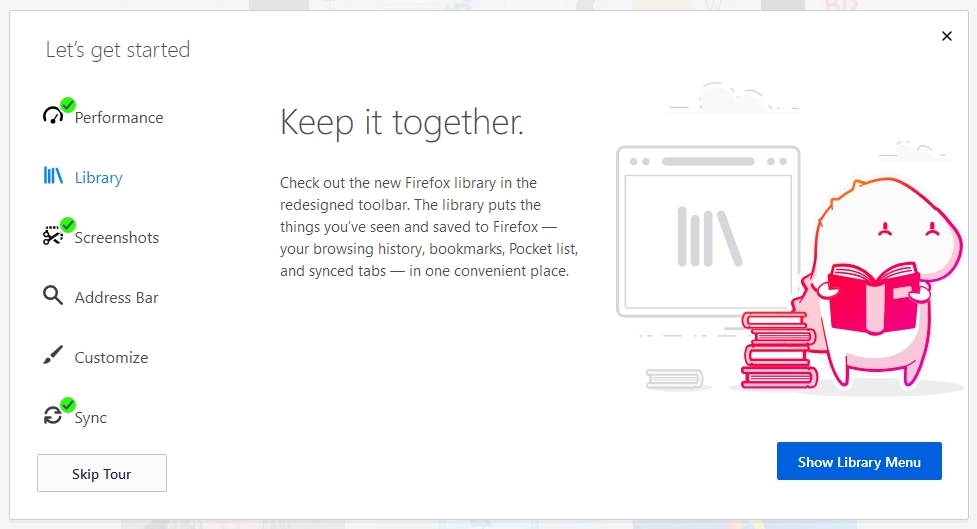

Now, however, Mozilla has released the latest version of its Firefox browser, named Firefox Quantum, and it is the first major update for the browser in almost thirteen years; you can read the blog entry straight from the horse’s mouth here. They claim it’s faster, better, has more features, is better able to manage resources, is compatible with new and emerging web technologies, and has many options for customization and configuration. It’s been redeveloped from the ground up, and it shows.

I gave it a run to see how it is, and it certainly does appear to be better. It’s very fast and very responsive, with a clean interface whose elements can be dragged and dropped to rearrange them any way you like, which is very nice. I started up some test sites to see how they loaded, including UCI’s homepage, IS301.com, Amazon, Plex’s homepage as well as my Plex home server, Hackaday.com, eBay, and Rotten Tomatoes. I also left up the default start page.

The tabs loaded quickly, although plex.tv and this site took longer than expected. When I compared response times with Opera, however, they loaded slowly there as well, so the problem was obviously server-side and not an issue with the browser itself; testing them later resulted in equally speedy load times. That wasn’t the case with actually playing videos using Plex, though: Firefox took much, much longer to buffer than Opera did, almost three times as long. I was unable to discern why, but the issue was repeatable and consistent. Barring that anomaly, everything else was very snappy and loaded without hesitation. One thing I should add about measuring response times: it is very difficult to do client-side, and it is often done via what is known as a stopwatch test, either in code or with an actual stopwatch. Needless to say, I find both approaches very unreliable, so I went with my own observation to get a general sense of how Opera and the new Firefox compared.
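For the curious, a stopwatch test "in code" can be as simple as recording a high-resolution clock before and after an action and repeating it a few times. The Python sketch below is a minimal, hypothetical helper (the `stopwatch` name is mine, not from any tool mentioned here) built on `time.perf_counter`. It also illustrates why the approach is unreliable for comparing browsers: timing a raw HTTP fetch like the commented example leaves out rendering, scripts, caching, and everything else a real browser does.

```python
import statistics
import time
from typing import Callable


def stopwatch(action: Callable[[], object], runs: int = 5) -> dict[str, float]:
    """Time `action` several times and summarize the wall-clock durations in seconds."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    durations = []
    for _ in range(runs):
        start = time.perf_counter()
        action()
        durations.append(time.perf_counter() - start)
    # Min, median, and max show the spread between repeated runs,
    # which is often larger than the difference you are trying to measure.
    return {
        "min": min(durations),
        "median": statistics.median(durations),
        "max": max(durations),
    }


# Hypothetical usage: time a bare HTTP fetch (not a full page render).
# import urllib.request
# print(stopwatch(lambda: urllib.request.urlopen("https://example.com").read()))
```

Reporting the spread rather than a single number makes it easier to see when a "slow" result is really server-side noise, as happened with plex.tv above.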

Firefox also has many new features, including deeper integration of Pocket, a personal web-content aggregator that Mozilla bought and integrated a while ago. I have never used it, but can understand how it could be quite beneficial for some. It also has an excellent snapshot feature that allows you to take a screenshot of all or part of a page and save it locally or share it to their cloud service. I don’t know why someone would use the latter, but the former is something I use quite a bit with Opera, and as we will see, Firefox does it much better. There are also the drag-and-drop interface customizations mentioned earlier, the library, which is just a collection of your browsing history and bookmarks, syncing across devices, and more. As the header image shows, they even have a new icon!

Of course, that’s where the important issue comes up. The fact is, while the new Firefox browser appears to be much faster, more responsive, and more stable, everything I said about Opera earlier, what that essentially does is bring it on par with Opera, except in the case of Firefox’s better screenshot functionality, which I will discuss later in the post. I tested each page I loaded in Firefox against the same page as it loads in Opera (with three times as many tabs open in Opera, no less) and the performance was the same. Opera wasn’t better as it had been in the past; it was simply the same. That’s a comment on both Firefox and Opera: on how good Opera has been all along, and on the catching up Firefox had to do, and did quite effectively and impressively, to reach it.

As mentioned earlier, there is one big difference in which Firefox easily takes the lead: the screenshot feature. Both Opera and Firefox have had this feature for some time, and I use it frequently, but Firefox’s implementation is now much better (MUCH better). It can identify sections of a page, such as a headline, picture, or other element, as you move your mouse, and snip that out, while Opera requires you to adjust a selection box. Additionally, Firefox has the neat ability to capture a whole webpage as a snapshot, even if parts of it are not visible in your browser; the image below was taken in Firefox even though the entire footer and about 20 percent of the header were not visible when I took it. That is a great feature, one that surpasses Opera’s full-screen (note: not full-page) capture, and it might be the clincher for some; it almost is for me.

Opera, on the other hand, allows screencaps to be marked up in a very limited fashion right in the capture window, something Firefox does not; however, this is less of an issue for me, as I usually use GIMP if I need to make extensive edits and IrfanView if I need to make size adjustments, batch actions, or simple crops. Good thing, too, since all Opera allows one to do is add some arrows or stickers; regardless of what Opera says, that’s not actually editing at all. In terms of capture flexibility, Firefox is the clear winner.

For these reasons, I can and will wholeheartedly recommend Firefox to anyone interested, as I think the improvements are significant, but I won’t stop using Opera, as it already had many of these features, as well as unit conversion that happens automatically when you highlight a measurement. It also has a customizable, tiled home screen that I find very useful; it’s one of my favorite features of Opera and the one I use most often. The new Firefox has something similar, with a single row of six, or a double row of twelve, small tiles representing your ‘top sites,’ which can be edited or added to, but not rearranged. Ultimately, it’s not as configurable as Opera’s landing page, and, very curiously, its screenshot functionality is disabled there as well.

Whichever you prefer, they are now both very good browsers, and the choice comes down to the preference of the user. I haven’t used Firefox in forever, but I am very pleased to see it in this new form and I hope it continues to get better and better. As I mentioned earlier, I can wholeheartedly recommend it now, something I couldn’t do before, and I will likely use it as a backup browser in place of Chrome, my current backup. There are of course many other choices in browsers, including Vivaldi, Pale Moon (don’t let the terrible web page scare you), and Brave, to name a few, as well as the better-known ones; here, however, I was only comparing the new Firefox to my main choice. Speaking of which, I was just notified that an update is available for Opera! Here we go…

Bing does Halloween right

Everyone knows I am a big fan of Microsoft. I always have been, even when their products were of questionable quality; what can I say, I like the company. But now, their products are masterfully designed and fully capable, a pleasure to use. Never mind they come with pricing to match, something that was once the sole domain of Apple (as were those other qualities, ahem).

Even so, I am not here to extol the virtues of Microsoft’s products or even software. No, I am here to compliment their masterwork on one of their services that, sadly, is often targeted for derision: Bing. Fan of Microsoft and Bing user though I am, the fact is that Bing simply doesn’t provide strong results when I do a search. It will give me results that are ten years old, not relevant to what I’m asking, or just plain bizarre. I’m no fan of Google, but I will say they do search better. So you’ll understand my elation when I can finally give Bing high praise for easily blowing Google out of the water at something search related.

Well, not search related per se, but related to their search page. This year, for Halloween 2017, Bing absolutely nails what a Halloween page should be, and destroys Google’s feeble video in the process. Score one for Bing! Their (almost) fully interactive homepage shows living portraits hanging on a staircase wall, and most of them have, shall we say, a unique personality when you click on them. You can view the page at any time of year by following this link; however, if for whatever valid reason you have an aversion to Bing, you can also see it in the video below. Well done, Bing! The fact that you do Halloween right makes up for all the ineffective searches I’ve done with you over the past year.

(I tried to research how specifically this page was created but found nothing. Probably because I searched on Bing. Still, I love the page, and I’ll always be a fan, Microsoft!).

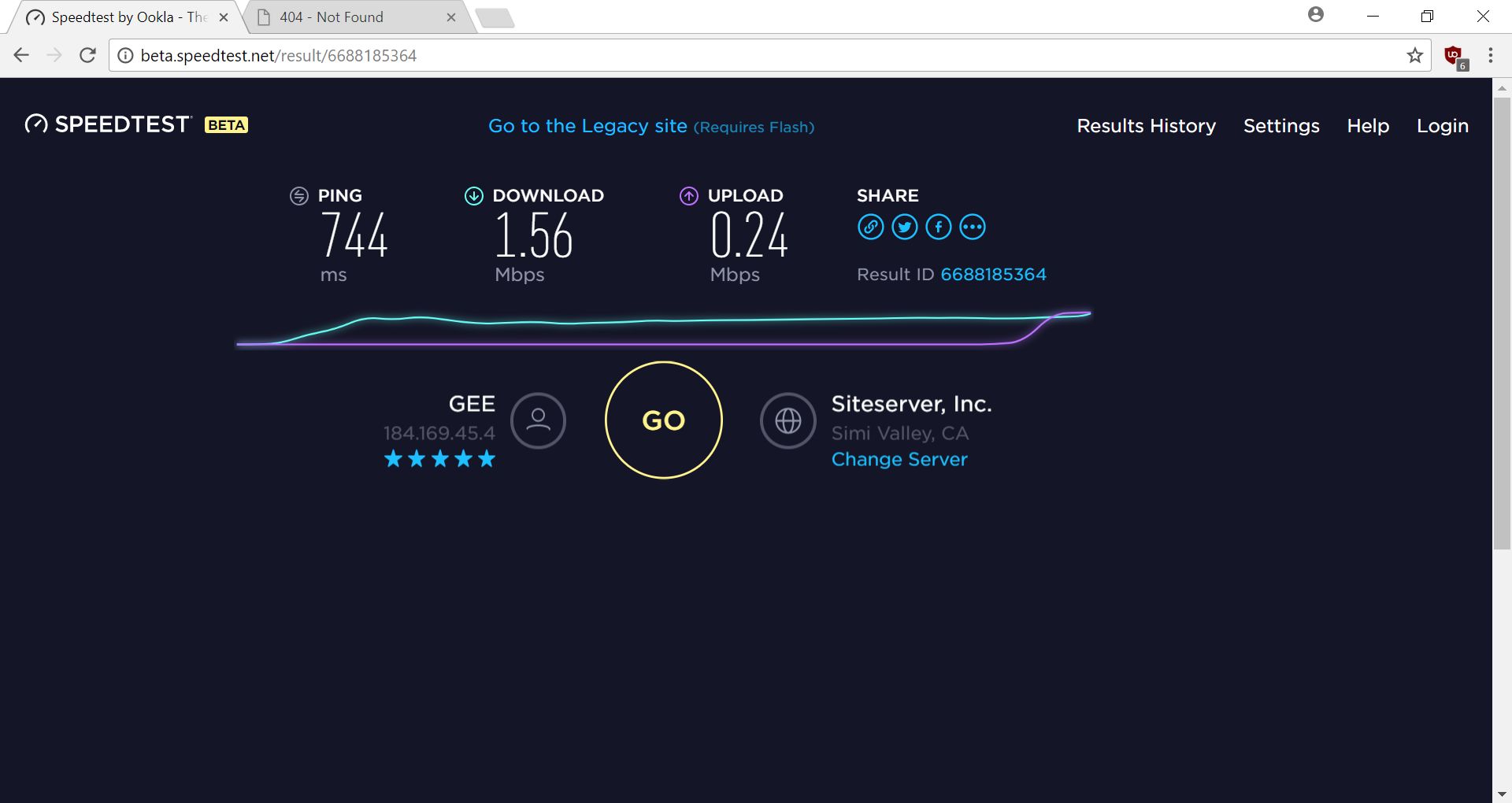

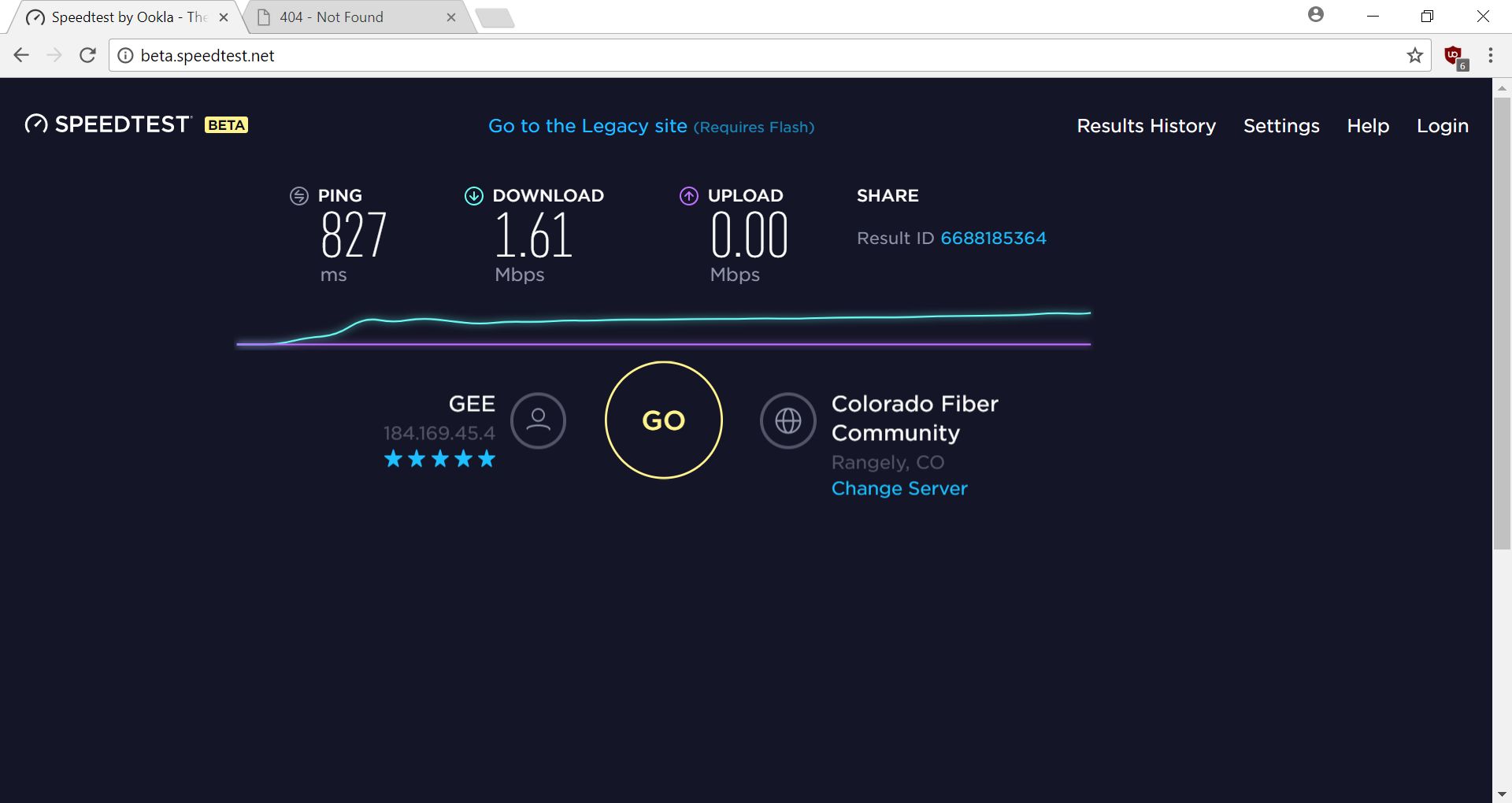

Here’s what wifi is like on Southwest

I’m 35,000 feet in the air on a Southwest flight to Philadelphia, and I paid $8 for wifi access. To say it is underpowered is an understatement, but there is a surprising story, with a twist, to go along with that. Take a look at the images below, and marvel at the sub-2.0 speeds and, if you can believe it, at one point 0.0 upload speeds! I’ve never seen that before, and that was after I switched to a closer test server.

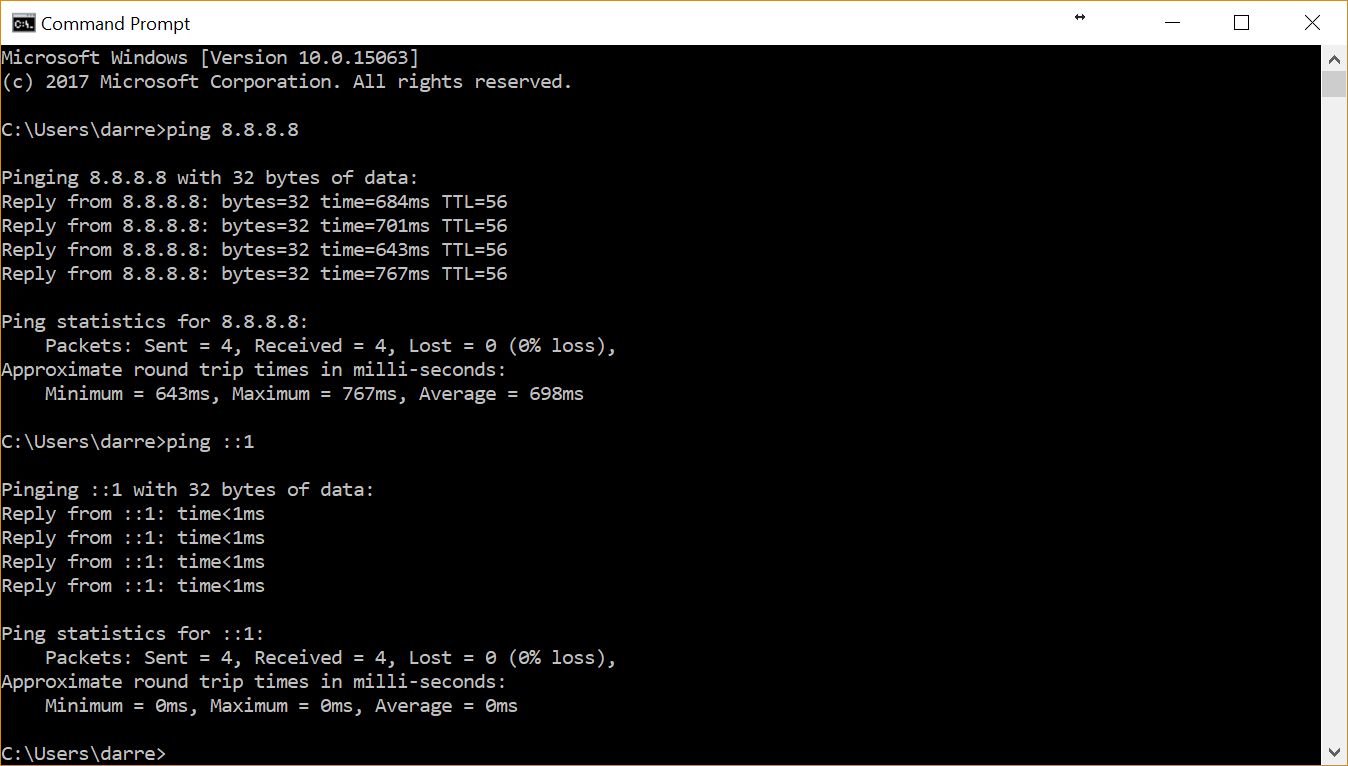

I pinged Google’s public DNS server at 8.8.8.8 and got some pretty sorry ping times there too. I had to ping the loopback address just to be sure everything was copacetic on my own end.

What I find most interesting about all of this is that when I connected to my Plex server back home, I was able to stream Law and Order just fine. No skips, buffering, nothing. Sound lined up perfectly, video played very smoothly.

I figured that was because each episode’s file is so small, approximately 350MB. So you can imagine my surprise when even 35GB Blu-ray rips played perfectly as well, as seen below in a screenshot of Abraham Lincoln: Vampire Hunter streaming from my server at home to my laptop on this airplane.

I don’t know if that’s more a comment on the quality of my at-home Internet service, the server Plex runs on, or what. For such a crap speedtest, Plex served up video swimmingly.
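A quick back-of-the-envelope check shows just how surprising the Blu-ray result is: average bitrate is simply file size in bits divided by running time. The Python sketch below assumes the speedtest figures were in Mbps, and it uses approximate runtimes that are my own assumptions, not figures from this post. It is illustrative arithmetic, not a measurement.

```python
def average_bitrate_mbps(size_gb: float, minutes: float) -> float:
    """Average bitrate (megabits per second) needed to play size_gb over `minutes`."""
    megabits = size_gb * 1000 * 8  # decimal units: 1 GB = 1000 MB, 1 byte = 8 bits
    return megabits / (minutes * 60)


# Assumed runtimes (not stated in the post): about 44 minutes for a Law and Order
# episode and about 105 minutes for Abraham Lincoln: Vampire Hunter.
episode = average_bitrate_mbps(0.35, 44)  # ~350MB episode
rip = average_bitrate_mbps(35, 105)       # ~35GB Blu-ray rip
print(f"episode: {episode:.1f} Mbps, Blu-ray rip: {rip:.1f} Mbps")
# prints: episode: 1.1 Mbps, Blu-ray rip: 44.4 Mbps
```

At roughly 1 Mbps, the episode is within reach of a sub-2 Mbps connection, but the rip averages more than twenty times that, so it is unlikely the original file was being sent untouched. One plausible explanation, which I haven’t verified, is that Plex transcoded the rip down to a bitrate the connection could handle.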

What I consider to be even more amazing is that I was able to connect to the vanilla World of Warcraft server Elysium with no troubles either. WoW has always been very efficient in terms of what happens on the client side versus what happens on the server side, but this is a private server, and I’m connecting via crap Southwest WiFi, so even though it’s early WoW and efficient coding, I was still very pleasantly surprised at how well it ran.

You didn’t think I was going to let you see my character name now did you?

Even writing this post was easy and responsive. Honestly, I’m not sure what to make of this whole experience. What is easily the worst speedtest I’ve ever run did not manifest in terms of poor performance; quite the opposite, actually. Everything ran very smoothly, whether it was serving up video, playing WoW, or even writing this post. I am definitely going to investigate further to see if I can identify how the one doesn’t lead to the other, and I will update when or if I make any further discoveries.

I guess it was worth the $8 after all.

UPDATE: The flight back was not so productive. I’m writing this update on said flight, and while my speedtest and ping results are similar to the flight out, performance this time suffered badly after about an hour, although I can’t determine the exact culprit. Plex worked for a while, then stopped (it came back while I was writing this update, but in fits and starts, buffering heavily between brief plays, and Blu-rays were a non-starter; that part could be a server-side issue). Additionally, Facebook wouldn’t load and Warcraft exhibited noticeable lag. There are many reasons this could be happening, from the odd tracert I ran that pathed through SoCal, to heavy WiFi use by everyone else on the plane (as illustrated by a heavily populated netstat result), to the weather, and beyond. Moral of the story: use Southwest WiFi at your own eight-dollar risk.