Category Archives: People

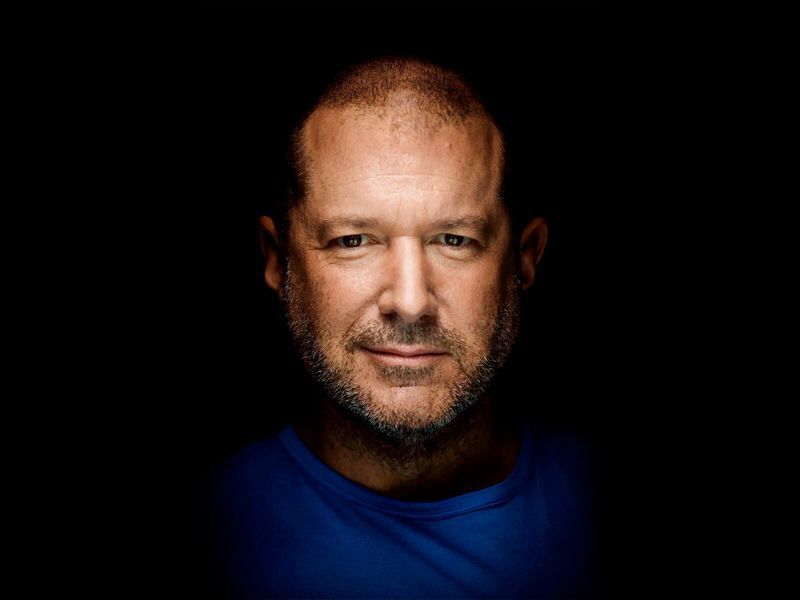

Jony Ive leaves Apple

As someone who teaches extensively about design as it intersects with technology, and who is also a computer and technology historian, I am conflicted about Jonathan (Jony) Ive leaving Apple. Mainly this is because he's not really leaving; however, any sense of him doing so makes me think Apple will continue to move away from the designs for which it is so noted.

While he will no longer be an Apple employee, he has decided to start his own design firm and will continue to contribute to and work with Apple. This seems like a very smart move, especially considering he was the creative force behind such behemoths as the iPad, the original and subsequent iMacs, everything in the iPod / iPhone line, the Apple Watch, and, who could forget, one of his first big projects: the TAM, or Twentieth Anniversary Macintosh. It was priced at an insane $7500 in 1997, but it had many luxury amenities such as a leather wrist rest, and no two were the same (none had the same startup chime or color, for example).

Not all of his ideas were a success. While the TAM was his first big contribution to Apple design, he had also worked on the Newton, which by the time he got involved was already flailing and clearly on its way out. In fact, it was one of the first products killed off when Steve Jobs returned to save Apple. It was at the time of that return that Jobs asked Ive to stay on as a designer and help get Apple, which was in financial distress at the time, back on its feet. It's well known that Jobs and Ive were aligned in terms of what design is and what it should be, and with the two of them working together, the result is a company that is now one of the most highly valued companies (and often the most highly valued) not just in the world, but of all time.

In a bittersweet way, Ive's departure signals the end of Steve Jobs' influence in the company Jobs helped found, which may be one of the reasons Ive has decided to forge his own path now. When Jobs returned to help the floundering company and asked Ive to help him, a powerhouse was formed. With Jobs gone and Ive leaving, Apple is now a different company, and I fear for its future as it moves away from the design principles that made it what it is and toward services that may dilute its brand.

I have a deep and profound admiration for Apple, even though they seem to have recently lost their way, with more of a focus on subscription services and less of a focus on hardware and design. Still, they were the company that made computing and technology popular and sort of accessible back in the day. Believe it or not, Apple, especially with the Apple IIe, was the computer to have for gaming and productivity, and you can still experience that through multiple online emulators such as VirtualApple.org and AppleIIjs, or by using the AppleWin emulator with the massive disk image collections at the Asimov archive or the Internet Archive.

They were instrumental in bringing design to what were otherwise fairly mundane technological products. Indeed, PCs of the day were commonly referred to as 'beige boxes,' because that's just what they were. Have a look (images sourced from a vogons.org message board thread about showing off your old PCs, which has many other great pictures).

Side note: Surprisingly, although I consider myself design focused, I don't hate these. That's probably because of nostalgia and the many fond memories I have of the days of manually setting IRQs and needing to display your current processor speed, but nostalgia powers many things.

Side note number two: I actually went to the same high school as both Steve Jobs and Steve Wozniak: Homestead High in Cupertino.

So farewell to Jony, and I hope you give us many more outstanding designs in the future. Farewell to the Jobs era of Apple as the company struggles creatively without him. I am keeping hope alive that form and function in design will continue to reign.

Learn about PC and OS pioneer Gary Kildall, from the inside

In my classes and on this site, I talk a lot about history. To me, it isn’t possible to be genuinely good at something unless that skill is accompanied by a respectful understanding of what came before. Otherwise, how could true knowledge be claimed?

I hold that to be true for everything. For example, if one claims to be a guitar player but knows nothing about Les Paul or The Beatles, they're not really a guitar player. They may play guitar, but a guitar player they aren't. Similarly, if one is a physician but doesn't know the groundwork laid by Louis Pasteur or Florence Nightingale, or how injuries were treated during the Civil War, then I would question their qualifications and their true interest in the field; after all, if they don't know the history of medicine, how interested in medicine could they really be? A true passion for something necessarily results in learning *about* that thing, and that includes its history.

That's why I talk about it so much. I'm always excited to learn a new piece of computing history, no matter how small; everything helps piece together the puzzle. It's also why I'm a member of the Computer History Museum, which recently released a heretofore unknown and quite major piece of history: the 'first portion' (about 78 pages) of an unpublished autobiography by one of the founders of the modern home-computing movement, Gary Kildall. You can read about it and download it here.

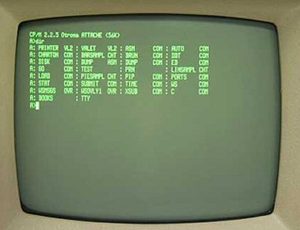

Gary Kildall developed the first true OS for what would become business and home computers, and he called it CP/M (Control Program for Microcomputers; you can download the source code from the CHM here). There are many stories about him and his place in the early days of computing. The most common one, which his kids claim is false but which has persisted and taken on a life of its own, is that when IBM showed up at his door to license his OS for its new line of PCs, he was out flying his plane, and his lawyers advised against signing the NDA that IBM provided. IBM, not being a company to wait around, went back to Microsoft and signed up with them instead. Ironically, IBM had approached Microsoft first, and Microsoft had sent them to Kildall in the first place! Needless to say, the rest is history.

If you look at the screen of a PC running CP/M, you'll notice, unsurprisingly, that MS-DOS looks very much like it.

The truth of that story has always been questioned, but it is generally accepted as what happened. Microsoft had no OS when IBM first approached, which is why they recommended Kildall. However, when IBM returned to Microsoft after the failed meeting, Bill Gates jumped at the opportunity, and it was all over for CP/M. Gates became the richest person on earth, and Gary Kildall, sadly, faded into comparative obscurity. The fact is, for all his contributions to computing, there just isn't very much known about him as a person. Even finding a decent header image was difficult.

That's why it was very surprising to see the Computer History Museum recently make a copy of the autobiography available. Apparently, he wrote it in 1993 and handed copies out to a few friends and family members, noting that it was intended to be published the following year. That never happened, and the manuscript has remained a buried treasure ever since.

Because it was written in his own hand and covers the events behind the urban legend of IBM, Kildall, and Gates, it is a fascinating read that gives insight into how things worked at the dawn of the personal computer age. I found it especially interesting that even though he had once created a BASIC compiler, he, in his own words, detested BASIC. I didn't know it was possible to feel that way about a computer language, but apparently he did. I was also struck that in the introduction to the memoir, Kildall's children mention their father's later struggle with alcoholism, which apparently surfaced in parts of the manuscript; that is the reason those sections were not included in the release.

I am more than ok with that, though. What has been provided in this first portion is a fascinating narrative and perspective, one not seen before, into the mind of someone who deserves much more credit than he gets.

A couple of side notes: You can see Gary in many episodes of The Computer Chronicles, an '80s–'90s show about technology that offers a really interesting and compelling look at what consumer technology used to be. And if you haven't been to the website of the Computer History Museum, you really should give it a look. There is so much there to see, and it's incredibly informative.

Alvin Toffler passes away at 87

Alvin Toffler got it right. It was all the way back in 1970 when he published Future Shock, a book that predicted, with surprising though not complete accuracy, the impact of technology on our future selves. From throwaway consumerism, which was a spot-on prediction, to Rapture-esque underwater cities, which were not so accurate, his vision of the impact of technology has mostly come true. Future Shock became a trilogy with two more books: The Third Wave and Powershift.

He also popularized the term 'Information Overload,' a term we use to this day and one I use frequently in my classes. It refers to our inability to take in and process the overwhelming amount of information that continues to be created even today. The title of the book itself refers to what would become our inability to keep up with the fast pace of the future. He predicted that the family as a cohesive unit would continue to be tested as the busy nature of life, and everything that goes along with it, wedged itself between familial bonds.

He correctly foresaw the development of a new knowledge economy and information age, in which the ability to learn and adapt would become more important than the ability to maintain a trade skill. We see this in the creation of job titles such as 'Knowledge Worker' (itself a term introduced by Peter Drucker in 1959). In his eyes, it would be the inability to adjust that would become the illiteracy of the new millennium.

Indeed, Alvin Toffler predicted technological development would completely reshape the fabric and structure of society, and we now know that he was right.

A true forward thinker.