Tag Archives: history

A win for digital preservation

As many of you know, I am a big fan of older software and systems, even maintaining a small collection of vintage computer systems and software. Indeed, it is the software that is important, as hardware without software is just hardware, nothing more. Every piece of hardware needs a killer app to run on it, or people don’t buy the hardware in the first place. It’s why game consoles need to have a robust software lineup available at launch, or else risk being left behind for the entire generation.

Thing is, preserving physical copies of software is easy, procedurally, anyway. You have the physical software, and you digitize it while preserving the physical copy itself, and it could be a permanent record. Of course, there are issues with maintaining the software in a runnable state, both for the digital version (are there suitable emulators available?) and clearly for the physical version, which is subject to all kinds of risks, both environmental and technical. There is the issue of bit rot, an ill-defined term that generally refers either to a physical medium becoming unusable because the hardware needed to read it has become obsolete, or to the gradual degradation of the medium itself due to the aforementioned environmental or other factors.

Even with physical copies, the grey-area legality of emulators is always front and center, with the real focus being on ROMs. Nintendo recently shut down long-time and much-loved ROM site EmuParadise simply by threatening legal action. Curious, considering EmuParadise has been around so long, but now Nintendo wants to start monetizing its older IPs, and EP might put a bee in that bonnet. Also, ‘shut down’ isn’t entirely true: the site is still up but no longer hosts ROMs, and even though there is still a lot of information there, the loss of the actual games is a big problem. I have to go further out of my way to find ROMs, even for games whose companies, platforms, and sadly even developers are long gone.

So why does this all come up now? Because we are in an age now where much of the software, data, and information we have is in digital form, not the physical form of old, and this leads to huge problems for historians and archivists such as myself. If something is only available digitally, when the storefront or host on which it is available goes down, how will we maintain an archived copy for future generations to see and experience? I have a Steam library with, at the time of this writing, over 300 titles that are all digital. There is no physical copy. So, just for argument’s sake, let’s say Steam closes shop. What happens to all those games? Those VR titles? That one DAW? Will they just vanish into the ether? Steam claims that if they ever shut down, we’ll be able to download them all, DRM free. But will we? As the best answer in that earlier link states, Steam’s EULA, which all users are required to accept, says that their games are licensed, not sold. That may seem strange, but it has actually been that way for a long time. Even with physical games, you don’t actually own them, and what you can do with them beyond simply playing them is exceptionally limited.

So how do we preserve these digital-only games? Whether a tiny development house creating an app, or a huge AAA title developed by hundreds of people: if the company shuts down or the people move on or whatever happens, how will we access those games in the future, 10, 20, 50 years from now?

This becomes even more of an issue when we have games with a back-end, or a server-side component. The obvious example is MMOs such as EverQuest and World of Warcraft. What happens when their servers shut down and the game can no longer be played? How do we continue to experience it, even if for research or historical purposes? If the server code is gone, having a local copy of the game does us no good. As many MMOs and other online games continue to shut down, the fear is they will simply fade into nothingness, as if they never existed, but their preservation as a part of the history of gaming and computing is important.

Some brave souls have tried running what are called ‘Private Servers,’ which are servers running code that is not run, supported, or authorized by the developing company. Again, the most common examples are World of Warcraft private servers you can join, which run not the current code but earlier versions that people often prefer. That brings up another interesting issue regarding the evolution of these digital worlds: even if a game is still going strong, as WoW is, how do we accommodate those who prefer an earlier version of the game, in this case known as vanilla, meaning with the original mechanics, structure, narrative, and other gameplay elements that were present at launch but have since been designed far out of the game? Vanilla WoW is not officially offered, although that will apparently be changing, but many private servers are immediately shut down via cease-and-desist orders from Blizzard, with one being shut down the day it came online after two years of development. Others manage to hang on for a while.

At the risk of going off on too much of a tangent, the reason I’m talking about this, and why it has taken me six paragraphs to get to my point, is that there has been a semi-wonderful ruling from the Librarian of Congress that essentially maintains an already-written rule: if a legacy game simply checks an authentication server before it will run, it’s legal to crack that game and bypass the check.

Additionally, while the ruling also allows legacy server code to be run and made accessible, that specifically cannot be done by private citizens, only by a small group of archivists for scholarly, scientific, historic, or other related reasons, and most importantly, the server code has to be obtained legally. That may be the biggest hurdle of all, as it is well known that some companies sit on games and IP long after their market value has faded, preventing them from being released or even reimagined by the public. I’m hoping this is the beginning of a renewed push for legal support to archive and utilize legacy code and legacy server code, to preserve not just games but software titles of all types (the new ruling includes everything), along with the interim forms they took throughout the course of their development.

One additional note that surprises me even to this day: there is one online game that, while very popular, was eventually shut down by its parent company. That game was Toontown Online, and it was developed by Disney, a company well known for aggressively protecting its IP. So it is especially surprising that when an enterprising teenager who missed the world Disney had created decided to run a free private server, renaming it Toontown Rewritten, Disney let it go! It has been up and running for a good number of years, has seen many improvements, updates, and additions (its terrible log-in client aside), and runs quite well. Here’s a screenshot of its current state, and as you can see it is still popular.

It still uses Disney-owned names and imagery, and has the unmistakable Disney aesthetic – there’s even a Toontown in Disneyland! If you want to see the proper way to handle this whole issue, here it is: once your game is done and abandoned, let an enthusiastic team that is passionate about the project and treats it with respect take over. It creates goodwill for you, a positive experience for them, and ensures your creation will continue to live on.

Learn about PC and OS pioneer Gary Kildall, from the inside

In my classes and on this site, I talk a lot about history. To me, it isn’t possible to be genuinely good at something unless that skill is accompanied by a respectful understanding of what came before. Otherwise, how could true knowledge be claimed?

I hold that true for everything. For example, if one claims to be a guitar player but knows nothing about Les Paul or The Beatles, they’re not really a guitar player. They may play guitar, but guitar player they aren’t. Similarly, if one is a physician, but doesn’t know the groundwork laid by Louis Pasteur or Florence Nightingale or how they treated injuries during the Civil War, then I would question their qualifications and their true interest in the field; after all, if they don’t know the history of medicine, how interested in medicine could they really be? A true passion for something necessarily results in learning *about* that thing, and that includes history.

That’s why I talk about it so much. I’m always excited to learn a new little piece of computing history no matter how small; everything helps piece together the puzzle. It’s also why I’m a member of the Computer History Museum, and they recently released a heretofore unknown piece of history that is quite major. It’s the ‘first portion’ (about 78 pages) of an unpublished autobiography of one of the founders of the modern home-computing movement, Gary Kildall. You can read about it and download it here.

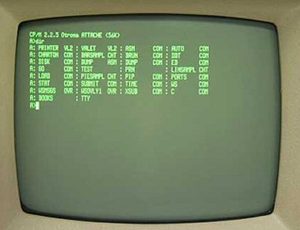

Gary Kildall developed the first true OS for what would become business and home computers, and he called it CP/M (Control Program for Microcomputers, and you can download the source code from the CHM here). There are many stories about him and his place in the early days of computing. The most common, the one his kids claim is false but that has persevered and taken on a life of its own, is that when IBM showed up at his front door to license his OS for use in their new line of PCs, he was out flying his plane and his lawyers advised him not to sign the NDA that was provided. IBM, not being a company to wait around, went back to Microsoft and signed up with them instead. Ironic, because IBM had approached Microsoft first, and Microsoft had sent them to Kildall in the first place! Needless to say, the rest is history.

If you look at the screen of a PC running CP/M, you’ll notice that, and this isn’t a surprise, MS-DOS looks very much like it.

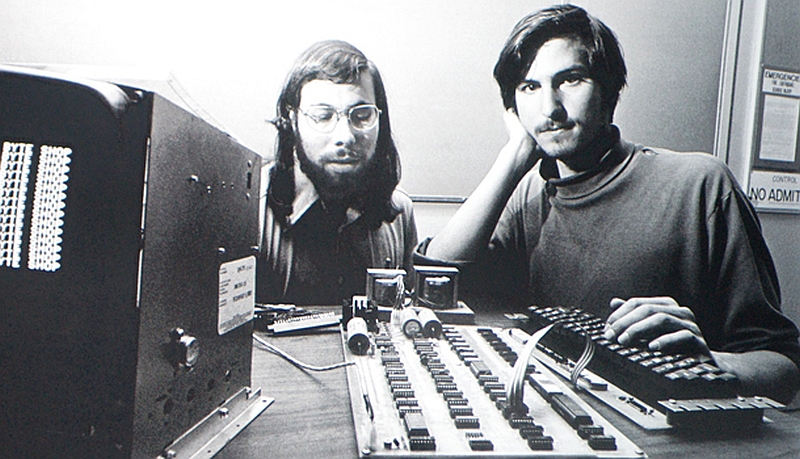

The truth of that story has always been questioned, but it is generally accepted as what happened. Microsoft had no OS when IBM first approached, which is why they recommended Kildall. However, when IBM returned to Microsoft after the failed meeting, Bill Gates jumped at the opportunity and it was all over for CP/M. Gates became the richest person on earth, and Gary Kildall, sadly, faded into comparative obscurity. The fact is, for all his contributions to computing, there just isn’t very much known about him as a person. Even finding a decent header image was difficult.

That’s why it was very surprising to see the Computer History Museum recently make available a copy of the autobiography. Apparently, he had written it in 1993 and handed copies out to a few friends and family, noting it was intended to be published the following year. Needless to say that never happened, and it has remained a buried treasure ever since.

Being written by his own hand, and talking about the events behind the urban legend of IBM, Kildall, and Gates, it is a really fascinating read, giving insights into how things worked back at the dawn of the personal computer age. I found it especially interesting that even though he had once created a BASIC compiler, he – in his own words – detested BASIC. I didn’t know it was possible to feel that way about a computer language, but apparently he did. I was also struck that in the introduction to the memoir, Kildall’s children mention their father’s later struggle with alcoholism; apparently it showed in the later writings, which is why those sections were not included in the release.

I am more than ok with that, though. What has been provided in this first portion is a fascinating narrative and perspective, one not seen before, into the mind of someone who deserves much more credit than he gets.

A couple of side notes: You can see Gary in many episodes of The Computer Chronicles, a 1980s and 90s show about technology that is a really interesting and compelling look into what consumer technology used to be, and if you haven’t been to the website of the Computer History Museum, you really should give it a look. There is so much there to see; it’s incredibly informative.

Triumph of the Nerds

You may remember my mentioning in class that I was considering having movie screenings for tech-related movies that also happened to be good movies. That would include titles such as TRON (the original, not that G-d-awful remake), WarGames, Hackers, Her, Minority Report, and a couple of Simpsons and Futurama episodes for starters. If you’re wondering, I’m working with the legal standing that it all falls under the ill-defined idea of fair use since it’s being shown, ostensibly, for educational purposes.

I was going to do all that using a site called cytu.be; however, I am still wrestling with it. Therefore, in the meantime, and since we have a couple of weeks before we gather again in class, I thought I would present to you a documentary about the history of the personal computer and the industry that grew up around it, called Triumph of the Nerds.

It’s not the greatest name, I know. It’s even derogatory in parts, although some of the characters live up to the title. And Robert Cringely, who wrote and narrates the whole thing, certainly means no harm. In fact, Bob Cringely is the well-known (and fake) name of long-time technology writer Mark Stephens, but the Bob Cringely name has actually been used by many people, and in fact two people are using it as a pseudonym right now!

A return to Antikythera

I always try to inject the history of technology into every class; however, for me the history of technology isn’t just about its digital offshoot, but rather technology in general. After all, technology – in a general sense – is using what you know to achieve a goal. I’m as interested in the Oldowan and Acheulean toolmaking industries, the abacus, the slide rule, the ENIAC, and the Analytical Engine as I am in Apple, artificial intelligence, space travel, and bionics.

That is why I was overjoyed to see this story on Sky News, stating that divers will be using the most advanced diving suits ever made to search for the rest of the components of the fabled Antikythera mechanism (that’s a picture of the original up above).

This is really two tech stories in one: The first is the development and use of the diving suits, and the second is the search for the remaining components of the mechanism. I’ll start with the suit.

The exosuit, as it’s called, is a pretty impressive device. It reminds me of a modern version of the Big Daddy from Bioshock. As the article states, it’s fully articulated at the joints, allowing for full movement; has claws for grasping whatever treasure the diver may find; transmits video via fiber optic cable; and can even be controlled by people topside in case the diver is incapacitated. It can operate at 1000 feet, and because it’s fed by cables the diver can stay down as long as necessary. I believe it’s one of the indicators of the future of deep exploration, a great step forward.

But the real story here is the search for the remainder of the mythical Antikythera mechanism. This is a long, involved story so I will try to be brief, yet complete.

In 1901, divers off the coast of Antikythera, Greece, discovered in an ancient shipwreck what appeared to be a complex, gear-driven device that turned out to be around 2000 years old. It took scientists over 100 years to determine what it was, and how it worked. Eventually it was discovered that this device was used to track all sorts of information in intricate detail: the movement of the stars and planets, the tides, and when both solar and lunar eclipses would take place, to name but a few, and it did so accurately to the second. It even accounted for leap years! It is believed by some to have been built by the fabled mathematician Archimedes.

It was so advanced that nothing like it had been seen up to that point, and it took another 2000 years (that’s two entire millennia!) before anything even came close to it again. Some say it’s the first analog computer ever made, but simpler machines such as the previously-mentioned abacus could be classified similarly.

The Antikythera mechanism, after extensive investigation and input from many specialists, not to mention many, many years, was slowly recreated digitally and pieced back together. This new dive, then, is incredibly exciting, as it will hopefully answer some of the questions that still linger. It is my opinion that, with all due respect to the marvelous creations developed throughout computing history, the Antikythera mechanism – because of the time of its development and the precision of its functions – is the single most amazing piece of technology ever created.

I have embedded two full-length documentaries below that examine the device, its restoration and recreation, and the current state of knowledge regarding it. The first is from the Ancient Discoveries series, the second is from NOVA. They’re both incredibly interesting; you won’t be disappointed!