Tag Archives: Artificial Intelligence

I warned you

A couple of classes ago, we talked about the memristor being developed by Hewlett Packard. We mentioned that it doesn’t work on straight binary, the on-off paradigm of the transistors currently used in computer chips, and that this would allow for vastly increased storage and speed, instant boots, and much lower power consumption.

We also discussed that there were claims it would give machines a low level of sentience, or self-awareness – remember our discussion of Black Widows? So in the spirit of our discussion of the memristor and its implications, I found this article over on CNN that not only discusses the memristor, but also reiterates all the concerns and issues we discussed in class.

Worm’s brain in Lego robot

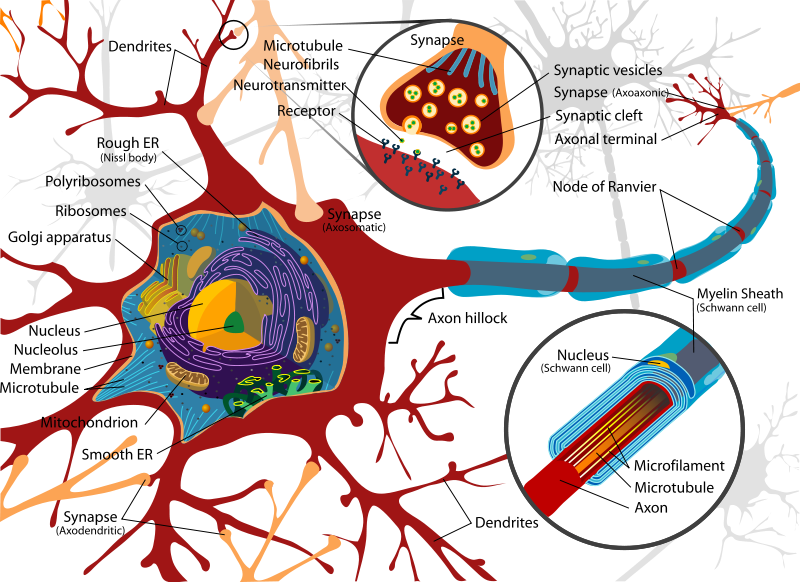

What a time to be alive! In class on Friday, we had a tantalizing foreshadowing of fascinating topics for next class such as Artificial Intelligence, Neural Networks, Genetic Algorithms and Intelligent Agents among others, and in our preliminary discussion about them, it was brought up that researchers had implanted the brain of a worm into a robot made of Legos.

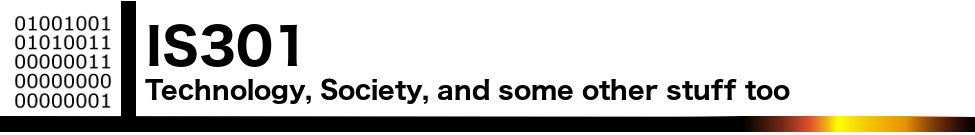

Well, not really. Although it sounds remarkable (and it is), the link between a computer and a brain is not so far off. Computers are sort of brains, and our brains are computers. Just like a digital machine, our brains rely in large part on electrical activity known as the action potential and the ability to carry that potential down a path of interconnected neurons, so transferring the behavior of a brain, if not the ability to cogitate, into a machine shouldn’t be the insurmountable task it may at first seem.

So to be clear, the researchers, members of the groundbreaking OpenWorm project (download their app!), did not transfer the *actual brain* of the worm into the Lego-bot. They recreated its activity in software, translating its low-level stimulus-response actions into programming code. Once that was complete, they were able to stimulate the robot in various ways, and it would respond in the same way the worm would respond. As stated in the post over on Smithsonianmag.com, when the nose of the robot was stimulated, forward motion ceased, just as it would in the worm.

What’s amazing is that none of that behavior was programmed in. The researchers programmed only the structure of the neurons, so the behavior *emerges* from the simulated wiring rather than being written out as explicit instructions.
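To make that idea concrete, here is a minimal sketch in Python. It is a toy, not the OpenWorm code: the neuron names, the weights, and the threshold are all invented for illustration. The only thing the program describes is which neuron connects to which, yet the "stop when the nose is touched" behavior still shows up.

```python
# Toy illustration only: the neuron names and weights below are invented
# for this example and are NOT taken from the OpenWorm connectome.

# Each entry is (source neuron, target neuron, connection weight).
# Positive weights excite the target; negative weights inhibit it.
CONNECTIONS = [
    ("tonic_drive", "motor_forward", 1.0),
    ("nose_touch", "inter_avoid", 1.0),
    ("inter_avoid", "motor_forward", -2.0),
]

THRESHOLD = 0.5  # a neuron fires when its summed input exceeds this value


def step(active):
    """Advance the network one tick and return the set of neurons that fire next."""
    totals = {}
    for source, target, weight in CONNECTIONS:
        if source in active:
            totals[target] = totals.get(target, 0.0) + weight
    return {name for name, total in totals.items() if total > THRESHOLD}


def run(stimuli, ticks=3):
    """Hold the given inputs on for `ticks` steps; report whether motor_forward fires at the end."""
    active = set(stimuli)
    for _ in range(ticks):
        # Inputs stay switched on while signals propagate through the wiring.
        active = step(active) | set(stimuli)
    return "motor_forward" in active


if __name__ == "__main__":
    print("moving, no touch:  ", run({"tonic_drive"}))
    print("moving, nose touch:", run({"tonic_drive", "nose_touch"}))
```

With only the background drive switched on, the forward motor neuron keeps firing. Add the nose-touch input and, a tick later, the inhibitory connection silences it. Nowhere in the code is there an "if the nose is touched, stop" rule; the stopping falls out of the wiring.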

Here is a video of the robot and its resultant behavior; watch, and be amazed (thanks to Dane for the subject and the link).

Stephen Hawking’s speech system gets an upgrade, comes with dire warning

This week there are two important stories, both relating to Stephen Hawking and to topics we have discussed previously in class. The first involves an upgrade to the system he uses to communicate, and the second is a dire warning from the man himself.

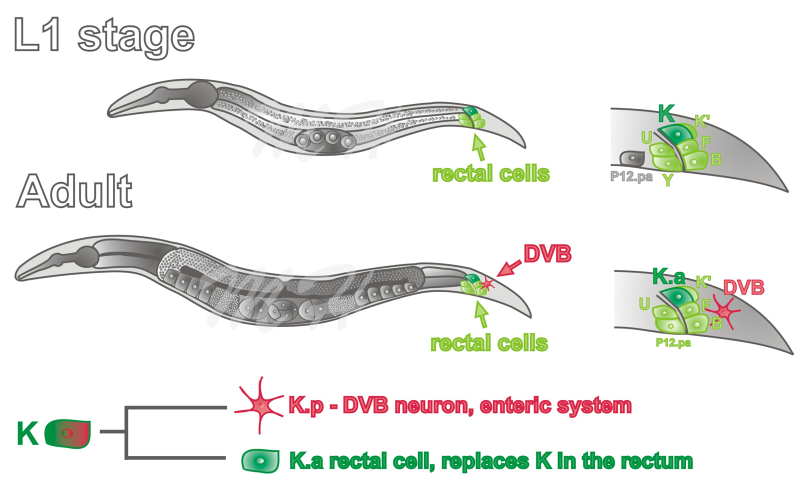

First, regarding the system he uses to communicate. For the first time in twenty years, it has received a major overhaul in how it operates. The story was all over the place; however, none of the articles about it did a very good job of discussing how it actually works. Instead, they all mentioned that a cheek-operated switch attaches to an infrared sensor on his glasses and lets him use the system. That’s a non-explanation, and even the person directly involved with the process did a terrible job of explaining it, as you can see in the video on this USA Today page, so hopefully I can add some detail that will help explain how it operates.

It’s true that because of his affliction he has only relative control over the muscles in his cheek, and that is how he interacts with his PC. An infrared switch on his cheek communicates with an infrared receiver on his glasses, which sends a signal to a USB key on the bottom of his wheelchair, which in turn communicates the choice to the PC so that he can make selections on a screen. You can see the sensor in his picture below, and it works like this: